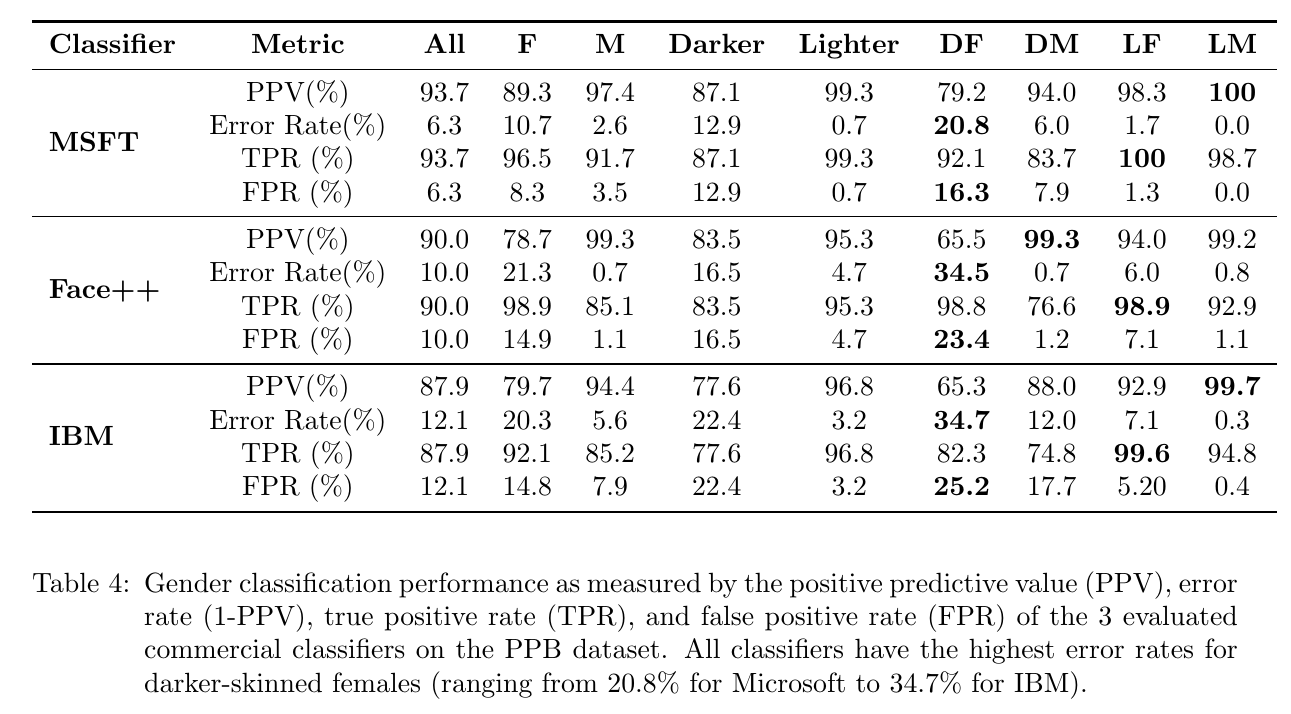

| Criterion | Statement | Formal definition | Interpretation |
|---|---|---|---|
| Independence | $\hY$ independent of $A$ | $\forall a, a', \hy: \quad p(\hY=\hy \mid A=a) = p(\hY=\hy \mid A=a')$ | The distribution of predictions does not depend on the group (sensitive attribute): demographic parity |
| Separation | $\hY$ independent of $A$ given $Y$ | $\forall a, a', y, \hy: \quad p(\hY=\hy \mid A=a, Y=y) = p(\hY=\hy \mid A=a', Y=y)$ | Given the true outcome (the actual skills), $A$ does not influence the distribution of predictions: equalized odds |
| Sufficiency | $Y$ independent of $A$ given $\hY$ | $\forall a, a', y, \hy: \quad p(Y=y \mid A=a, \hY=\hy) = p(Y=y \mid A=a', \hY=\hy)$ | Given the prediction, $A$ does not influence the distribution of the true outcome, i.e. the error distribution $y \mid \hy$ |
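
The three criteria can be checked empirically by comparing conditional frequencies across groups. The following is a minimal sketch, assuming binary labels and predictions; the helper names `conditional_rates` and `max_gap` and the toy data are hypothetical and only serve as illustration. A gap of 0 means the criterion holds exactly on the sample.

```python
from collections import Counter

def conditional_rates(target, group, given=None):
    """Estimate p(target = 1 | group = a, given = g) for every observed (a, g) cell."""
    if given is None:
        given = [None] * len(target)  # no conditioning variable
    totals, positives = Counter(), Counter()
    for t, a, g in zip(target, group, given):
        totals[(a, g)] += 1
        positives[(a, g)] += t
    return {cell: positives[cell] / totals[cell] for cell in totals}

def max_gap(rates):
    """Largest between-group difference, computed within each conditioning value."""
    gaps = []
    for g in {cell[1] for cell in rates}:
        values = [r for (a, gg), r in rates.items() if gg == g]
        gaps.append(max(values) - min(values))
    return max(gaps)

# Toy data, made up for illustration: group A, true label Y, prediction Y_hat.
A     = ["a"] * 4 + ["b"] * 4
Y     = [1, 1, 0, 0, 1, 1, 0, 0]
Y_hat = [1, 1, 0, 0, 1, 0, 0, 0]

# Independence: compare p(Y_hat = 1 | A = a) across groups.
independence_gap = max_gap(conditional_rates(Y_hat, A))
# Separation: compare p(Y_hat = 1 | A = a, Y = y) across groups, for each y.
separation_gap = max_gap(conditional_rates(Y_hat, A, given=Y))
# Sufficiency: compare p(Y = 1 | A = a, Y_hat = y_hat) across groups, for each y_hat.
sufficiency_gap = max_gap(conditional_rates(Y, A, given=Y_hat))

print(independence_gap, separation_gap, sufficiency_gap)
```

On this toy sample none of the three gaps is zero, so the predictor violates all three criteria. With finite data an exact zero is rare, so in practice one would compare the gaps to a tolerance or use a statistical test.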