|  |  |  |
|---|---|---|
|  | $\dd_t\, u(t,x) + L\, u(t,x) = 0 \quad \forall (t,x) \in [0,T]\times\Omega$ |  |
| with constraints | $u(0,x) = u_0(x) \quad \forall x \in \Omega$ | [initial condition] |
| and | $u(t,x) = g(t,x) \quad \forall (t,x) \in [0,T] \times \dd\Omega$ | [boundary condition: control] |
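A small numerical sketch may help make the system above concrete. It relies on assumptions that are not in the source:

- the domain is $\Omega = (0,1)$;
- the operator is $L = -\partial_{xx}$, so the state equation becomes the 1D heat equation;
- the discretization is explicit Euler in time with central differences in space.

The function name `solve_heat_boundary_control` is hypothetical. The boundary data $g(t,0)$ and $g(t,1)$ act as the control. The initial condition is used at $t = 0$, and the boundary condition is imposed from the first time step onward.

```python
import math


def solve_heat_boundary_control(u0, g, T=0.1, nx=21, nt=None):
    """Explicit finite-difference sketch of u_t + L u = 0 on (0, 1).

    Assumptions (illustrative, not from the source): Omega = (0, 1),
    L = -d^2/dx^2, Dirichlet control u(t, x) = g(t, x) at x = 0 and x = 1.
    Returns the grid and the approximate state u(T, .).
    """
    dx = 1.0 / (nx - 1)
    if nt is None:
        # Explicit Euler needs dt <= dx^2 / 2; aim for dt ~ dx^2 / 4.
        nt = max(1, math.ceil(T / (0.25 * dx * dx)))
    dt = T / nt
    if dt > 0.5 * dx * dx:
        raise ValueError("time step too large for explicit Euler stability")
    r = dt / (dx * dx)
    xs = [i * dx for i in range(nx)]
    u = [u0(x) for x in xs]  # initial condition: u(0, x) = u0(x)
    for n in range(1, nt + 1):
        t = n * dt
        new = u[:]
        for i in range(1, nx - 1):
            # discrete u_t = u_xx, i.e. u_t + L u = 0 with L = -d^2/dx^2
            new[i] = u[i] + r * (u[i - 1] - 2.0 * u[i] + u[i + 1])
        # boundary condition (the control): u(t, x) = g(t, x) on the boundary
        new[0], new[-1] = g(t, xs[0]), g(t, xs[-1])
        u = new
    return xs, u
```

With $g = 0$ and $u_0(x) = \sin(\pi x)$, the computed value at $x = 0.5$ should be close to the exact $e^{-\pi^2 T}$. With $u_0 = 0$ and a constant control $g = 1$, the state is driven toward $1$ as $T$ grows. The explicit scheme was chosen for brevity; an implicit scheme would remove the time-step restriction.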

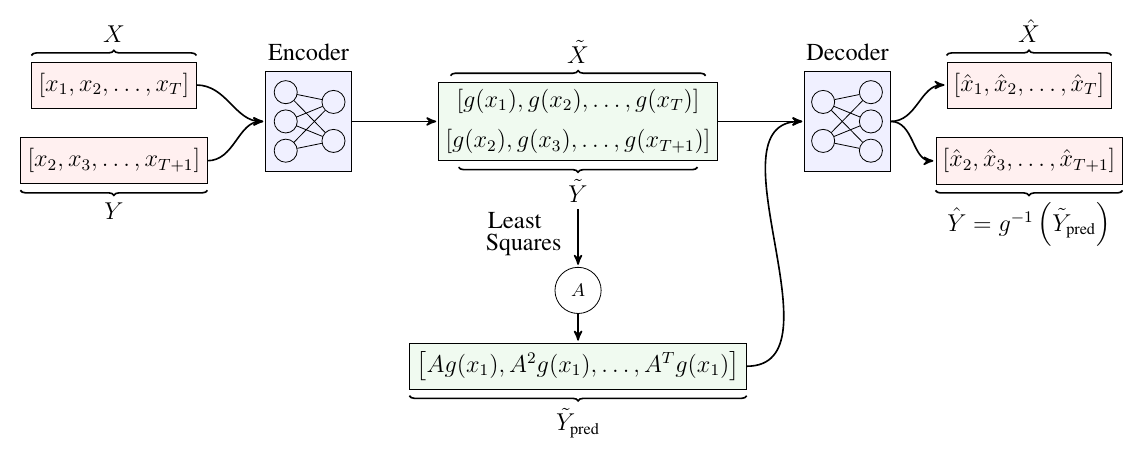

|  |
|---|
| $X \to X'$ |
| $Y \to Y'$ |
| $X' \to X$ |
| $Y' \to Y$ |