Generative Theories of Interaction

ACM Trans. Comput.-Hum. Interact., Vol. 28, No. 6, Article 45, Publication date: November 2021.

DOI: https://doi.org/10.1145/3468505

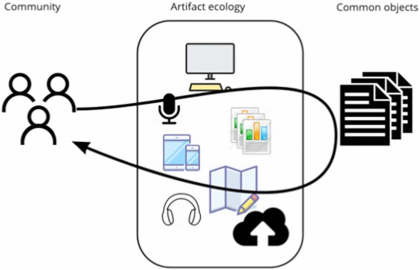

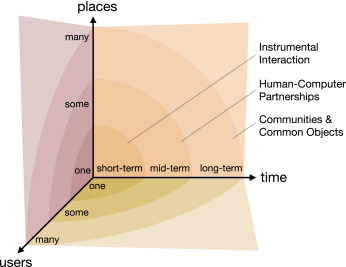

Although Human–Computer Interaction research has developed various theories and frameworks for analyzing new and existing interactive systems, few address the generation of novel technological solutions, and new technologies often lack theoretical foundations. We introduce Generative Theories of Interaction, which draw insights from empirical theories about human behavior in order to define specific concepts and actionable principles, which, in turn, serve as guidelines for analyzing, critiquing, and constructing new technological artifacts. After introducing and defining Generative Theories of Interaction, we present three detailed examples from our own work: Instrumental Interaction, Human–Computer Partnerships, and Communities & Common Objects. Each example describes the underlying scientific theory and how we derived and applied HCI-relevant concepts and principles to the design of innovative interactive technologies. Summary tables offer sample questions that help analyze existing technology with respect to a specific theory, critique both positive and negative aspects, and inspire new ideas for constructing novel interactive systems.

ACM Reference Format:

Michel Beaudouin-Lafon, Susanne Bødker, and Wendy E. Mackay. 2021. Generative Theories of Interaction. ACM Trans. Comput.-Hum. Interact. 28, 6, Article 45 (November 2021), 54 pages.

https://doi.org/10.1145/3468505

1 INTRODUCTION

Human–Computer Interaction (HCI) is a multi-disciplinary research discipline that draws from the natural sciences, which seek to explain naturally occurring phenomena; from the social sciences, which seek to explain human phenomena; from design, which seeks to explain how designs emerge to support human activity; and from engineering, which seeks to enable and guide the creation of innovative technology. HCI has produced a large body of such knowledge, including a deeper understanding of the behavioral phenomena involved in HCI; the creation of a variety of novel devices and interaction techniques; and extensive empirical know-how about the design process.

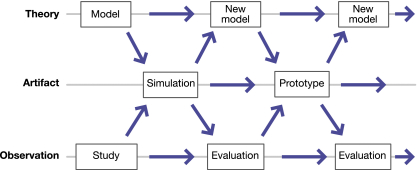

Mackay and Fayard [112] describe the relationship between theory and empirical studies in the natural sciences, where scientific research involves a back-and-forth cycle between theoretical constructs and empirical observation. They note that HCI research includes a third element—artifact design—making it what Simon [141] calls a “science of the artificial”. Figure 1 shows how a specific HCI research project may start at any level, whether theory development, artifact design, or empirical observation, and proceed along different paths through other types of research activity. Legitimate outcomes of HCI research may thus include new empirical discoveries, innovative interactive artifacts, or novel theory.

When we examine HCI theory from this perspective, we argue that a piece is missing: We lack generative theories that provide a direct link from empirically based theories in the natural and social sciences to HCI-specific constructs that suggest and inspire novel forms of HCI. This article introduces the concept of Generative Theories of Interaction, which provide analytical, critical, and constructive power for explaining existing interactive systems and inspiring new ones.

We engage with generative theory at multiple levels, from understanding human interaction with technology, to identifying HCI-specific concepts, to articulating generative principles that operationalize these concepts, and finally, to testing these theories in real-world applications. Our primary audience consists of HCI researchers who are interested in either building new theory or reframing existing theories in new and generative ways. We focus specifically on those HCI researchers who achieve this by investigating particular groups of users or exploring how to improve interactive systems. Although we do not specifically address design practitioners, we expect that Generative Theories of Interaction will help HCI researchers transmit their results by teaching students, who later become design practitioners and apply these concepts and principles in real-world settings.

This article first defines Generative Theories of Interaction, and explains how they draw inspiration from well-established scientific theories that they then apply to HCI-specific research questions. After positioning Generative Theories of Interaction with respect to related work, we introduce three specific generative theories of interaction and show how we applied their generative principles to HCI research questions, to produce new empirical findings, novel artifacts, and refined theory. We conclude with a discussion of the value of Generative Theories of Interaction and directions for future work.

2 GENERATIVE THEORIES OF INTERACTION

We view HCI research not so much as problem solving [77], but rather as generating insights and opening up new possibilities for design in the face of constantly changing technology. Critically, individual users’ interactions with technology are dynamic, and evolve as the user's practice, experience and specific, real-world context change over time [23, 31]. Since the user experience is never definitive, we need HCI theories, methods, and tools that embrace these dynamics, both to identify new design ideas and to create new artifacts that assume that interaction changes over time.

This resonates with a number of familiar perspectives in HCI, such as Schön's [137] reflective practitioner and activity theoretical HCI [11, 35], as well as Kaufman's [88] concept of the “adjacent possible” from biology. Johnson [85] generalized Kaufman's concept to address any generative process, describing it as “a kind of shadow future, hovering on the edges of the present state of things, a map of all the ways in which the present can reinvent itself ... [it] captures both the limits and the creative potential of change and innovation”. When applied to HCI research, each new insight about users or technological invention has the potential to enlarge the adjacent possible surrounding interactive systems, creating an ever-increasing design space of possibilities to explore.

2.1 Definition

We introduce Generative Theories of Interaction to encourage this exploration of the “adjacent possible”: Each generative theory of interaction builds upon ideas from empirically based scientific theory, and introduces HCI-specific concepts and actionable principles that, when applied to specific research questions, help generate new insights about users and inspire novel design directions. Each generative theory of interaction offers what Brown [41] calls a conceptual lens for understanding interaction within a specified scope, helping HCI researchers to conceptualize and articulate research questions, and to bridge the gap between HCI research and artifact design. The longer term goal is to help transform the ever-growing body of HCI knowledge into actionable principles that can be taught to students and practitioners, and productively guide the exploration and production of novel technological artifacts.

We define a Generative Theory of Interaction as a construct that is

(1) grounded in a theory of human activity and behavior with technology;

(2) amenable to analytical, critical, and constructive interpretation; and

(3) actionable through the theory's concepts and generative principles.

(1) Generative Theories of Interaction should not be based solely on intuition or anecdotal evidence, but grounded in descriptive, predictive, or prescriptive theories of human activity and behavior from the natural and social sciences, especially biology, experimental psychology, sociology, and anthropology. This helps ensure that we “stand on the shoulders of giants” [115] to build a relevant body of knowledge. Although a variety of theories provide suitable foundations, we particularly value theories that address the dynamic aspects of human behavior in relation to technology.

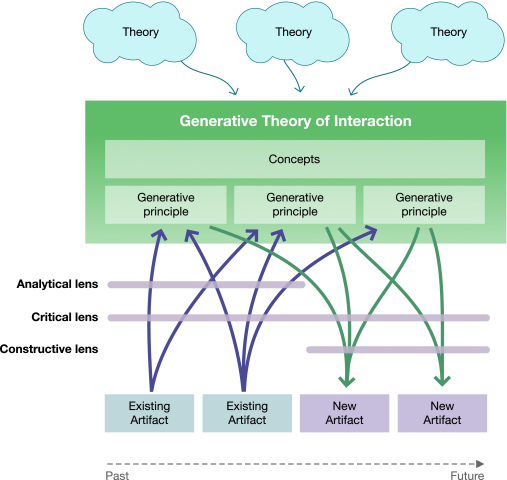

(2) Generative Theories of Interaction involve three successive lenses to help HCI researchers both understand current use of technology and suggest future interactive systems: The analytical lens provides a description of current use and practice; the critical lens assesses both the positive and negative aspects of a system given different needs and contexts of use, thus providing avenues for improvement or re-design; and the constructive lens inspires new ideas relative to the critique, expressed in terms of the generative theory's concepts and principles.

(3) The three lenses are enabled through the theory's concepts and principles. We do not intend generative theory to somehow automatically generate new insights or possibilities, e.g., with generative algorithms. Instead, each generative theory's concepts and principles provide tools that enable the principled assessment, exploration, and expansion of the design space related to a particular research problem and its possible solutions.

Figure 2 shows how concepts and generative principles associated with a particular generative theory of interaction enable analytical, critical, and constructive lenses for analyzing existing and new artifacts. Observations of the use of existing artifacts can also inspire new generative principles, which then inform the design of new artifacts.

2.2 Scope

Theory comes in many forms, with different emphases on concepts, principles or empirical grounding, and different goals with respect to precision, context, and generality [136]. HCI research includes a particularly wide variety of underlying theory, ranging from predictive Fitts’ law [60] analysis of pointing behavior, typically studied with lab-based hypothesis-testing experiments, to qualitative assessments of the human experience and technology in society, typically studied in the field. We borrow Mackay and Fayard's [112] characterization of scientific theories that involve an interplay between theoretical and empirical investigation. The associated research activities may include qualitative and/or quantitative evidence and result in descriptive, predictive, and/or prescriptive theory. As shown in Figure 1, they may start with empirical results that lead to testable theories or with theories subject to further empirical investigation.

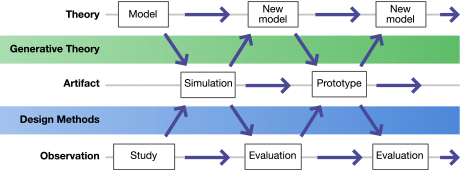

Figure 3, adapted from Mackay and Fayard [112], illustrates how generative theories of interaction link theory and artifact design (upper green area), just as HCI design methods [111] link artifact design and empirical observation (lower blue area). They encourage a change-oriented perspective by providing HCI researchers with conceptual tools for analyzing technologies in use or exploring novel future solutions. Although creating or refining Generative Theories of Interaction is clearly an activity best suited for HCI researchers, not practitioners, we are confident that individual generative theories of interaction can be applied productively to real-world design practice.

Instead of seeking universal generality, generative theories of interaction should be bounded in scope and address a clearly defined context or set of issues, at an intermediate level of abstraction. The emphasis here is on what might be called “micro” HCI research, whose goal is to produce specific outcomes within an interaction design process, as opposed to a more “macro” view that seeks broader explanations of human behavior and activity with technology.

Note that we do not define specific criteria for which theories are eligible to ground a generative theory of interaction, beyond ensuring that they are based on an empirical foundation that justifies their core concepts and principles. Nor do we claim that all HCI theories should be generative, or that all HCI designs should result from applying generative theory. While we believe that generative theories can consolidate HCI knowledge in useful ways, we also recognize the many other valid ways of conducting research and design in HCI. We cannot speak to the appropriateness of these other types of theory for creating Generative Theories of Interaction, but they clearly offer potential for future research.

3 RELATED WORK

HCI is a multi-disciplinary field that includes aspects of natural science, social science, design, and technology development, with correspondingly different approaches to theory. Neuman [120, p. 30] describes theory as “a system of interconnected ideas that condense and organize knowledge”. However, the term “theory” has multiple definitions that may vary across or even within research disciplines. For example, Abend [1] offers a nuanced analysis of seven distinct meanings of the term “theory” in Sociology, and the confusion this has caused. Here, we are explicitly interested in empirically based theories that involve qualitative and/or quantitative data. This makes the first three of Abend's theory definitions suitable: (1) establishing relationships between two or more variables; (2) explaining particular social phenomena; and (3) describing the meaning or significance of phenomena in the social world.

To bridge the resulting cross-disciplinary gaps, venues such as the flagship ACM/CHI conference ask authors to consider the relationship between empirical findings and technological novelty. Empirical contributions are encouraged to include “implications for design”, despite Dourish's [55] argument that few social scientists have the necessary technical or design background. Technical contributions are encouraged to demonstrate the effectiveness of the proposed technique or system, which is tricky when behavioral measures fall outside the standard experimental paradigm. Despite these issues, maintaining this ongoing tension between conceptually, empirically, and technically oriented papers has served the HCI community well.

A small percentage of the HCI literature proposes or discusses theory explicitly, with different approaches, motivations, means, and disciplinary perspectives. However, authors rarely try to explain why we need theory, and instead offer theory as a tool for categorizing and describing different aspects of HCI. We review two main strands of theorizing in HCI that relate to our approach, namely the development of theory to better understand how people interact with technology, and theory that supports the innovation process.

3.1 Theory Related to Understanding Human Interaction with Technology

Carroll [46] and Rogers [133] each describe and assess common theories in HCI research. Interestingly, the vast majority of these theories have their roots outside of HCI, such as applied perception, motor learning, distributed cognition, ecological psychology, ethnomethodology, and activity theory. However, several of these theories were developed in conjunction with ongoing HCI research. For example, qualitative descriptive theories, such as distributed cognition [78] and situated action [144, 145], were originally grounded in anthropology. However, each was developed in the context of HCI to challenge the disembodied nature of 1980s cognitive science and what Suchman calls the “plans” that characterize rule-based artificial intelligence research. Similarly, activity-theoretic HCI [87] originally draws from activity theory, but continues as a key HCI theory [104] for describing human behavior with respect to technology. More quantitative theories include Card, Moran, and Newell's GOMS (goals-operators-methods-selection rules) model [44] and Appert's CIS (Complexity of Interaction Sequences) [5], both of which are framed in terms of causal inference, with methodology from experimental psychology to design controlled laboratory experiments and develop quantitative HCI-related theory.

Rogers [133] distinguishes between first-generation theories that aim at being prescriptive by directly advising how to construct a user interface (p. 91), and newer, second-generation theories that do not share this aim. She suggests that we consider these theories in terms of their formative and generative power: to build overarching frameworks for HCI is to provide a set of concepts “from which to think about the design and use of interactive systems”. The idea is to “stimulate new ideas, concepts, and solutions. In this sense, the theory can serve both formative and generative roles in design” (p. 121). We agree with Rogers’ assessment that the formative and generative roles of theory are fundamentally different, and further, we suggest explicit strategies for creating generative theory.

Gergen [65] also argues in favor of generative theory that “can provoke debate, transform social reality, and ultimately serve to reorder social conduct”. This is a wider and more radical way of thinking about generative theory than Rogers’ more design-oriented approach, but nonetheless equally relevant, because it suggests that generative theory should not only help practitioners be creative, but also reframe research accordingly. Gergen, who coined the term “social constructivism” in psychology, belongs to a group of authors, including Shotter [140] and Garfinkel [64], who were inspired by Wittgenstein to consider a generative stance. Their work connects this perspective with foundational work in HCI, e.g., Ehn [57]. Gergen further suggests that we “consider competing theoretical accounts in terms of their generative capacity, that is, the capacity to challenge the guiding assumptions of the culture, to raise fundamental questions regarding contemporary social life, to foster reconsideration of that which is ‘taken for granted’, and thereby to furnish new alternatives for social action”. This opens the possibility of generative engagement with one theory, as well as using and comparing the generative potential of parallel or competing theories. Like Gergen, our goal is to reconsider that which is “taken for granted” and “thereby to furnish new alternatives”, although at a different scale than social and cultural theories.

As suggested by Rogers [133, 134] and Shneiderman [139], generative theories focus on enhancing creativity in interaction design by employing grounded concepts, methods, and principles. They also inform technological alternatives both theoretically and empirically, thus leading to more principled, coherent designs.

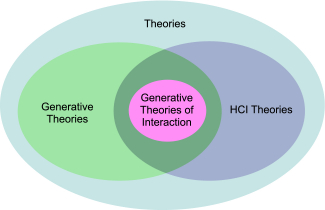

Compared with previous uses of generative theory in HCI, Generative Theories of Interaction feature an explicit link with their existing grounding theories, and include explicit concepts and generative principles that can be applied to specific research questions. They thus constitute a well-defined subset of the generative theories as defined by Rogers [133] (see Figure 4).

3.2 Theory Related to the Innovation Process

A key role for generative theories is to help spark innovation and suggest new directions for designs that better meet the needs of users in different contexts. Gaines [63] proposes the BRETAM model of the information systems innovation process: After an initial Breakthrough that changes how we think of technology, other researchers Replicate or slightly modify the ideas as point designs. Next comes Empiricism, where the community codifies the work into lessons learned and design rules. This makes it possible to generate Theory, with causal reasoning and testable hypotheses, followed by Automation, as theories are accepted, and Maturity when the theories are in widespread use. Greenberg [68] argues that HCI has begun moving along Gaines's BRETAM trajectory, but that many areas are “throttled at the replication stage”, minimizing their impact on product invention and innovation.

Even so, a few HCI areas have moved further along Gaines's trajectory, such as Wellner's Digital Desk [153], a Breakthrough demonstration of how to seamlessly link digital information and physical paper. This inspired research in multiple research areas, including tangible computing, mixed and augmented reality, for which it received ACM/UIST's first lasting impact award. Various researchers Replicated the digital desk or explored variations. As the necessary technology decreased in size and increased in power, new opportunities arose for “intelligent” objects and “wearables” and mixed physical-virtual environments, which Mackay [112] characterizes as points within a design space where technology can augment the user, the object, or the environment or any combination of the three (Empiricism). Early formulations of Theory in that space include Ishii and Ullmer's Tangible Bits [80], which capture what is now referred to as tangible computing. However, such theories remain rare and are not at the stage where they are Automated or universally accepted, much less sufficiently Mature for widespread use by practitioners.

Kostakos [96] analyzes the HCI literature in terms of “motor themes”, and shows how researchers who publish at CHI conferences rarely focus on a particular topic to advance it. Instead, they move from topic to topic without further substantial development. HCI as a field arguably overvalues Breakthroughs, with little reward for Replication and Empiricism, much less Theory. Unfortunately, Kostakos [96] offers little help in framing and motivating these motor themes and theoretical contributions. Kostakos’ analysis led Oulasvirta and Hornbæk [127] to view HCI research as problem solving, offering an interesting take on how empirical, constructive, and conceptual research (should) play together as categories in HCI research:

Conceptual problems are non-empirical; they involve issues in theory development in the most general sense [...] Conceptual problems might involve difficulties in explaining empirical phenomena, nagging issues in models of interaction, or seeming conflicts between certain principles of design [...] Work on a conceptual research problem is aimed at explaining previously unconnected phenomena occurring in interaction. (Oulasvirta and Hornbæk [127, p. 4958])

Following this work, Hornbæk and Oulasvirta [77] define the term “interaction” by examining seven key concepts that highlight how conceptual research has developed in HCI over the past few decades. They define the “constructive power” of an HCI theory as “the scope, validity, and practical relevance of the counterfactual reasoning it permits that links the conditions of interaction and its events” [77, p. 5048]. While this is more restrictive than our notion of a constructive lens in generative theories of interaction, we share their concern that over-emphasizing empirical and constructive research at the expense of conceptual research contributes to the paucity of abstraction and theory in the HCI literature, and agree that combining conceptual with empirical and constructive research is needed to advance the field.

Another perspective is Höök and Löwgren's [76] “strong concepts”, which generate intermediate-level knowledge and play a direct role in the creation of new designs. Strong concepts act as only one of several possible intermediate-level forms of knowledge that can emerge from design-oriented HCI research. Others include HCI-specific design patterns [53] from the software engineering literature. We see these contributions as possible building blocks for the concepts and principles of future generative theories of interaction.

With a focus on creating building blocks, Bødker [22] offers a conceptual toolbox based on situation concepts and technological constructs that transfer the lessons learned from two large European research projects to inform specific designs, provoke ideas during the design process, and encourage change. With a similar focus on making change, and based on extensive interviews with HCI researchers and designers, Zimmerman et al. [158] propose four criteria for evaluating interaction design research contributions: process, invention, relevance, and extensibility. Unlike the quest for validity in the behavioral sciences, they argue that interaction designers should demonstrate how their contribution relates to and improves upon the current state-of-the-art and how others can leverage the knowledge gained, similar to the Replication and Empiricism stages of Gaines's BRETAM model [63]. Although these and similar efforts suggest useful generalizations from design work, they offer few clues as to how other interaction designers might actually accomplish these goals. Therefore, we do not consider them “actionable” in our definition of a Generative Theory of Interaction.

By contrast, Lottridge and Mackay's [107] “generative walkthroughs”, which link socio-technical principles directly to story-based design prototypes, explicitly bridge the gap between theory and design. After learning principles such as distributed cognition and situated action, designers systematically critique each step of a storyboard with respect to each principle, and then apply those principles to inspire new design alternatives. This is closest to our approach, but lacks the transition between high-level external theory and HCI-specific theory.

In summary, Generative Theories of Interaction explicitly target the innovation process in a research setting. Compared with the work outlined above, they strive to operationalize theoretical constructs into concepts and generative principles that can then be applied to specific research questions, providing both a path from theory to artifact and a principled method for exploring the research design space.

4 THREE EXAMPLES OF GENERATIVE THEORIES OF INTERACTION

To illustrate the notion of a Generative Theory of Interaction, we present three examples drawn from research carried out by the three co-authors over many years. Each follows a common structure that begins by briefly introducing a specific generative theory of interaction and its theoretical foundations, and explains how it influenced the core HCI concepts. We then present a set of actionable generative principles for analyzing and critiquing existing interactive technologies, showing how they lead to new insights about users and generate ideas for innovative interactive systems that address these critiques. Each project began with observation of or interviews with target users to better understand the initial design problem. We then systematically applied the principles from the associated generative theory of interaction to inspire new technology designs, which we evaluated relative to the users’ needs.

All three examples are based on our common interest in a particular phenomenon in HCI, namely understanding artifacts as being created by human beings with future use in mind and used by people to achieve certain goals. The artifact therefore plays the role of a tool or mediator among people involved in the activity. In HCI, the tool perspective dates back to the 1980s and the extensive discussion on automation and Artificial Intelligence that took place at the time. For example, Weizenbaum [152] analyzed computing as a means, not an end for human beings, whereas Winograd and Flores [154] identified the fundamental role of tools in human action, and how humans generate the world by developing both tools and their use, emphasizing the importance of breakdowns as sources of learning and the shift between tool and object. Ehn and Kyng [58] and Göranzon [67] promoted skill as an essential quality in developing the tool perspective, pointing out that human tool use does not happen in isolation but is situated in human practices and materials. Several authors were inspired by Heidegger's discussions of how the tool, in use, extends the human body [74] and the dialectics between the tool “ready-to-hand”, i.e., that extends the hand, vs. “present-at-hand”, i.e., being the object of interest, developing over time in the hermeneutic circle. While the three example theories presented here share these roots, they are in no way a defining feature of Generative Theories of Interaction.

5 EXAMPLE 1: INSTRUMENTAL INTERACTION

The first example targets traditional desktop interfaces and focuses on the design of more efficient and powerful interaction techniques for graphical user interfaces (GUIs). The GUI was created in the late seventies [84] to help executive secretaries carry out office tasks. After 40 years, computers come in many forms and are used for a wide range of tasks by a wide variety of users, yet the most common interfaces are still based on applications, documents, files, and folders, with the same menus, buttons, and dialog boxes and the same input devices as the early systems. GUIs are obviously limited in the face of the new challenges of ever larger and more complex data and the wide range of uses and users of computers.

GUIs are based on the principles of direct manipulation [79, 138] where physical actions on visual representations of the objects of interest replace the text-based commands of earlier command-line interfaces. However, direct actions in current GUIs are typically limited to selecting content, e.g., with a click or a tap, and moving it, with a drag. More complex commands use widgets such as menus, scrollbars, and dialog boxes that break the fundamental principle of “direct manipulation of the object of interest” [138]. They enact a form of mediated interaction where the user's actions are transformed and interpreted before being applied to the target object. Some techniques, such as dialog boxes, create a strong decoupling between the users’ actions and their effects, while others, such as the use of tools in a tool palette, resemble the familiar use of physical tools, such as using a pencil or a brush to draw or paint.

Instrumental Interaction [13] was born out of the observation that our interactions in the physical world are also often mediated by tools, devices, and instruments, and are rarely direct. The goal of Instrumental Interaction is therefore to acknowledge this mediated interaction in the digital world and to give the mediators, called instruments, the status of first-class objects in the interface.

5.1 Theoretical Foundations

While humans are not the only species that use tools, they are unique in their ability to actively and intensively create new ones. Evidence of tool use by early humans dates back at least 3.3 million years [72], including traces of older adults training younger ones to create and use tools. Throughout human history all the way to modern life, human activity has been mediated by physical tools that extend and enhance our capabilities, or simply make life easier. Surprisingly, relatively few studies of human tool use can be found in the Psychology and HCI literature.

5.1.1 Human Tool Use in Psychology. In his theory of affordances, Gibson [66] mentions tools only briefly but makes a key observation: “When in use, a tool is a sort of extension of the hand, almost an attachment to it or a part of the user's own body, and thus is no longer a part of the environment of the user” [66, p. 40]. For Gibson, “The affordances of the environment are what it offers the animal, what it provides or furnishes, either for good or ill” [66, p. 127, emphasis by the author]. Holding a tool thus redefines the affordances of the environment, since it changes the person's capabilities. Recent work supports this theory that a tool becomes an extension of the body schema, as if it were an integral part of the body [89]. This property provides a psychologically and cognitively sound basis for creating extensible systems, where new digital tools reveal new affordances.

Cognitive scientists have also studied human tool use to understand the cognitive processes at play when selecting and using tools. Osiurak et al. [126] contrast two competing views of tool use. The computational approach states that perceived properties of the tool are translated into physical actions for use, whereas the ecological approach states that the affordances of the tool (what can be done with it) are perceived directly. More recently, Osiurak [125] reports on experiments with patients suffering from several forms of apraxia (action disorganization syndrome) that suggest that tool use involves “technical reasoning”, a specific type of reasoning that complements the direct perception of what the tool can do.

Technical reasoning is based on abstract technical laws, which, unlike skills acquired through procedural learning, do not require constant reinforcement. For example, people do not forget how to ride a bike, even after many years without practice, but commonly forget simple procedures, such as changing the time on a digital clock. Technical reasoning relies on identifying the technical properties of objects and their appropriate combination to perform a task and reach the desired goal. It explains unanticipated uses of objects as tools, such as using a knife to undo a screw when a screwdriver is not available. Both procedural learning and technical reasoning are clearly useful in everyday life. However, given that current computer systems are heavily based on procedural learning, we focus on the ability of technical reasoning to enable appropriation.

This difference between procedural learning and technical reasoning can be a powerful basis for creating (digital) tools that are more “natural”, in the sense that they do not need constant re-learning. An interesting and open research question is to identify which types of digital tools, if any, elicit technical reasoning vs. procedural learning. A sensible hypothesis is that the digital tools found in tool palettes are more likely to involve technical reasoning, while the use of menus and dialog boxes seems more likely to involve procedural learning.

5.1.2 Human Tool Use in HCI. As mentioned in the previous section, the tool perspective dates back to the 1980s with the works of, e.g., Weizenbaum [152], Winograd and Flores [154], and Ehn [57]. Ehn and Kyng [58] and Göranzon [67] see human craftsmanship and skill as essential qualities in developing the tool perspective, pointing out that human tool use does not happen in isolation but is situated in human practices and materials. Surprisingly, however, the role of tools in human-artifact interaction is absent from seminal works in HCI such as Card, Moran and Newell [44], Suchman [145], or Norman [123].

In their taxonomy of the concept of interaction, Hornbæk and Oulasvirta [77] include tool use as one of seven core conceptions of interaction. Indeed, several authors have focused on the mediating role of tools (or instruments) in the relation between human users and their objects of interest, addressing issues such as indirectness. Bødker [22] emphasizes how tool use is embedded in human practice, with individual and cultural/collaborative sides that mirror each other. Rabardel [130, 131] analyzes the genesis of instruments as either directed toward the subject (“instrumentation”) or toward the artifact (“instrumentalization”). Beaudouin-Lafon [13, 14] argues for the design of interaction models that can be used to describe, evaluate, and generate interaction styles and techniques, and introduces Instrumental Interaction, a model based on explicit digital tools.

In summary, while human tool use has not been a mainstream topic of research in either Psychology or HCI, it brings an enlightening perspective on the role of mediating artifacts in our interaction with both the physical and digital worlds.

5.2 Concepts of Instrumental Interaction

The main concept of Instrumental Interaction [13] is that interaction with digital content should be mediated by digital tools called instruments that operate on the objects of interest. In line with the above theories of human tool use, we define instruments as objects that can be manipulated by the user to affect other objects. In order to elicit technical reasoning, and therefore user appropriation, instruments must have clear technical properties that can be combined with those of target objects. In the same way that a pen can write on different types of surfaces, even those it was not necessarily designed for, such as a wall, a digital pen should work with different types of content.

A number of current computer interfaces feature “tools”, usually grouped into tool palettes: The user selects a particular tool and can then apply it to the object(s) of interest. These tools do not meet the above definition of instruments, though, because they typically only work with pre-defined types of objects and are trapped inside their applications.

Consider a simple, ubiquitous digital tool in current interfaces: the color picker (Figure 5). Color pickers vary within a single application, e.g., text vs. highlighting in Microsoft Word; within a single suite, e.g., Microsoft Word vs. Excel; and across suites, e.g., Microsoft Office vs. Adobe Creative Suite. Users must constantly adapt, since the Adobe Photoshop selector cannot be used in Microsoft Word, nor vice versa, and the text coloring tool cannot be used to pick a highlight color. The only way to copy a color across applications is through a separate tool, e.g., the eyedropper. Colors cannot be applied to objects that were not designed to be user-colorable, e.g., the window frame or document background. Color pickers often feature color palettes and color swatches, but these are rarely first-class objects that can be saved, loaded, exchanged, or attached to a document. Conversely, a user cannot designate an existing object as a color swatch, turning it into a tool. Finally, in multi-device environments, color pickers cannot be used collaboratively or migrated to a handheld device, e.g., to adjust color on a wall-sized display from a handheld touch tablet. In fact, current color pickers do not address the needs of professionals who use color for their everyday work [82].

Instrumental Interaction challenges current digital tool design by separating instruments from the information or documents they operate on. The goal is to both leverage our everyday skills in interacting with the physical world through tools and make the digital world more flexible by letting users choose the tools they want to use, create their own tools, and compose their own digital environments. Instrumental Interaction is grounded in the theories outlined in the previous section, in particular the notion of tool as mediating artifact, as extension of the body, and as construct that turns an object into an agent for action.

The generative power of Instrumental Interaction can be readily illustrated with the above example of the color picker: in an instrumental interface, any color picker could be used with any content that has color properties, both to extract and apply color to objects; existing objects could be turned into a color picker, such as a photo or the color histogram extracted from it, as in Histomages [49]; color swatches would be first-class objects that can be moved, resized, and combined to assess color in context, as in ColorLab [82].

We now introduce the generative principles that we developed for Instrumental Interaction, and illustrate their analytical, critical, and constructive power with two examples.

5.3 Generative Principles of Instrumental Interaction

We introduced the three principles of Instrumental Interaction, Reification, Polymorphism, and Reuse, in 2000 [17], together with Instrumental Interaction itself [13], and have since then applied them extensively.

5.3.1 Reification. The key principle of Instrumental Interaction is Reification: An instrument reifies an abstract command or concept, i.e., it embodies it into an object that the user can interact with to manipulate a target object. For example, a scrollbar reifies the action of navigating a document by mediating the user's actions: The user acts upon the scrollbar by moving the thumb, which acts upon the document, changing which part of the document is displayed in the window.
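The scrollbar example above can be sketched in code. This is only an illustration of the mediation chain (user acts on the thumb, the thumb acts on the document); the `Document` and `Scrollbar` classes, their fields, and the mapping from thumb position to first visible line are assumptions made for this sketch, not part of any system described in this article.

```python
from dataclasses import dataclass


@dataclass
class Document:
    """Object of interest (hypothetical): lines of text seen through a window."""
    lines: list
    window_height: int
    first_visible: int = 0

    def visible(self) -> list:
        return self.lines[self.first_visible:self.first_visible + self.window_height]


@dataclass
class Scrollbar:
    """Instrument (hypothetical): reifies the 'navigate' command as a thumb."""
    target: Document
    thumb: float = 0.0  # thumb position, clamped to [0, 1]

    def move_thumb(self, position: float) -> None:
        # The user acts on the thumb; the instrument acts on the document.
        self.thumb = min(1.0, max(0.0, position))
        max_first = max(0, len(self.target.lines) - self.target.window_height)
        self.target.first_visible = round(self.thumb * max_first)


doc = Document(lines=[f"line {i}" for i in range(100)], window_height=10)
bar = Scrollbar(target=doc)
bar.move_thumb(0.5)
print(doc.visible()[0])  # prints "line 45"
```

The document never receives a "scroll" command directly: every change to what is displayed goes through the state of a visible, manipulable object.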

Reification is the fundamental generative principle for creating new instruments: When confronted with the problem of providing a given functionality, designers can imagine an instrument that reifies it, in order to make it concrete for the user. This contrasts with the traditional approach where a new functionality is typically bound to a new menu item that acts as a “magic word” to invoke the functionality, typically forcing procedural learning of which command does what and when it is available. By contrast, a “properly designed” instrument leverages technical reasoning: The tool should have a technical effect that makes it more understandable and memorable than the mere arbitrary mapping between a menu entry and a command.

Of course, not every reification of a command into an instrument will be successful. Applying the principle still requires skill, creativity, luck, and the patience to iterate. Nevertheless, as we will show in the next sections, Reification has been instrumental (so to speak) in helping us design interfaces that are more powerful, yet simpler to learn and operate, than their non-instrumental counterparts.

5.3.2 Polymorphism. Reification generates new instruments, calling for an additional principle to control the potential explosion of the number of instruments in the environment. The second generative principle, Polymorphism, helps create instruments that can be applied to objects of different types.

For example, a coloring instrument can work with different types of objects, and even different parts of objects. Many drawing tools have a different command to change the border color vs. the fill color of an object, and the color of text vs. the color of text highlighting. A single coloring instrument can replace these commands, by acting differently depending upon whether it is applied to the border or interior of a graphical object, to text, or to a highlighting brush.

As in programming languages, Polymorphism can be used for better or for worse. To be successful, a polymorphic instrument must have some internal consistency that makes its behavior with different types of objects predictable. Here too, we expect to leverage technical reasoning: “I want to change the color of a folder in the file explorer, I should be able to use the same color tool as in my text editor”, rather than procedural learning: “I have to remember that to change the background color of the document I need to go to the document settings”.
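The analogy with programming languages can be made concrete with a small sketch of a single coloring instrument that behaves consistently across object types. The `Shape`, `Text`, and `Folder` classes and the `apply_color` function are hypothetical names chosen for illustration; the dispatch uses Python's standard `functools.singledispatch`, which is one possible mechanism among many.

```python
from dataclasses import dataclass
from functools import singledispatch


# Hypothetical object types, each with its own color-bearing properties.
@dataclass
class Shape:
    fill: str = "white"
    border: str = "black"


@dataclass
class Text:
    color: str = "black"


@dataclass
class Folder:
    icon_color: str = "blue"


@singledispatch
def apply_color(target, color: str, part: str = "interior") -> None:
    """One coloring instrument; the fallback rejects objects without color."""
    raise TypeError(f"{type(target).__name__} has no color property")


@apply_color.register
def _(target: Shape, color: str, part: str = "interior") -> None:
    # Same instrument, different effect on the border vs. the interior.
    if part == "border":
        target.border = color
    else:
        target.fill = color


@apply_color.register
def _(target: Text, color: str, part: str = "interior") -> None:
    target.color = color


@apply_color.register
def _(target: Folder, color: str, part: str = "interior") -> None:
    target.icon_color = color


shape, text, folder = Shape(), Text(), Folder()
for obj in (shape, text, folder):
    apply_color(obj, "red")
apply_color(shape, "green", part="border")
```

The internal consistency called for above shows up here as a single entry point with one meaning ("give this thing that color"), whatever the target type.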

5.3.3 Reuse. The third generative principle of Instrumental Interaction is Reuse: the ability for users to reuse previous actions (Input Reuse) or the results of these actions (Output Reuse). This principle is based on the observation that computer tasks are often repetitive and users prefer starting from existing content and modifying it rather than starting from scratch. Historically, many physical tools have been created to facilitate reuse and make repetitive tasks more efficient. For example, pins were replaced by staples to bind documents, and then paper clips were introduced to facilitate reuse and to easily unbind documents without damage [128].

Output Reuse is already present in many interfaces through the “copy-paste” and “duplicate” commands. However, designing with Output Reuse in mind can help extend the power of these commands, for example with the ability to copy the tools themselves in order to create variants, e.g., brushes with different patterns. Similarly, Input Reuse is often present in the form of a “redo” command, and sometimes with the ability to access and selectively re-execute past actions. Combining Input Reuse with Reification leads to creating instruments that embody a sequence of past actions, empowering users to create their own tools through use.
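As a minimal sketch of combining Input Reuse with Reification, the following code records the actions a user performs and turns them into a new instrument that can be applied elsewhere. The `History` and `RecordedInstrument` classes, and the representation of objects as dictionaries, are assumptions made for this example only.

```python
from typing import Callable

Action = Callable[[dict], None]


class RecordedInstrument:
    """Hypothetical instrument that embodies a sequence of past actions."""

    def __init__(self, actions: list) -> None:
        self.actions = actions

    def apply(self, target: dict) -> None:
        for action in self.actions:
            action(target)


class History:
    """Hypothetical log of the actions the user has performed."""

    def __init__(self) -> None:
        self.actions = []

    def do(self, action: Action, target: dict) -> None:
        action(target)
        self.actions.append(action)

    def reify(self, start: int = 0) -> RecordedInstrument:
        # Input Reuse + Reification: past actions become a reusable tool.
        return RecordedInstrument(list(self.actions[start:]))


def make_bold(obj: dict) -> None:
    obj["bold"] = True


def make_red(obj: dict) -> None:
    obj["color"] = "red"


history = History()
title = {"text": "Title"}
history.do(make_bold, title)
history.do(make_red, title)

emphasize = history.reify()      # a tool created through use
caption = {"text": "Caption"}
emphasize.apply(caption)
```

Because the reified instrument is itself an object, it could in turn be copied or modified, which is where Output Reuse applies to tools as well as to content.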

5.3.4 Power in Combination. Beyond their individual generative power, the three principles are even more powerful in combination: Reification creates more objects that can be targeted by Reuse, Polymorphism expands the types of objects that can be targeted by a given instrument, including groups of heterogeneous objects, and Reuse facilitates the creation of new objects and instruments by end users.

We now illustrate the analytical, critical, and constructive power of Instrumental Interaction, and show how its principles directly guided the design of novel interaction techniques, with two examples drawn from our own recent research: StickyLines [51], for graphical editing, and Textlets [71], for text editing.

5.4 Applying the Generative Principles: STICKYLINES

StickyLines [51] addresses the issue of aligning and distributing graphical objects. It refines and extends the notion of magnetic guidelines, which arose in the context of applying these principles to the design of the CPN/Tools editor and simulator for Colored Petri Nets (CPNs) [16, 18]. StickyLines is the result of participatory design workshops with graphic designers and users of tools such as Adobe Illustrator, where we used the generative principles to address their specific needs.

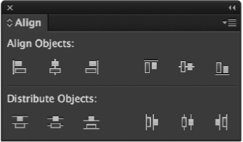

5.4.1 Analytical and Critical Power. Our studies of users of CPNs showed that they use graphical alignment extensively to create diagrams that are both visually pleasing and semantically meaningful. The tool they used, Design/CPN, featured alignment commands similar to most graphical tools (Figure 6): a set of menu items or buttons in a tool palette to align the selected objects horizontally or vertically, along their centers or one of their sides.

The fact that these commands are not reified into a manipulable object means that users spend up to 25% of their time aligning and re-aligning objects, or selecting a group of aligned objects before moving them together so as to keep them aligned. While Design/CPN, like most graphical tools, lets them group a set of objects to manipulate them as a single object, they seldom used this feature because many objects are part of multiple, overlapping alignments. We observed similar issues with professional designers using tools such as Adobe Illustrator. Moreover, they also often needed to adjust the alignment or distribution of shapes to be visually correct: When aligning logos, for example, the visual center is rarely the geometric center of the bounding box. Similarly, when distributing objects, the visual size is often not the bounding box of the object. Graphic designers therefore routinely adjust alignment and spacing manually. Unfortunately, the system does not keep track of these adjustments, which users need to perform again every time they re-align or re-distribute the objects.

The alignment commands of tools such as Design/CPN and Adobe Illustrator are also insufficiently polymorphic. While they can be used to align different types of shapes, they are limited to horizontal and vertical alignments. Some Petri Nets and graphical designs lend themselves to, e.g., circular layouts, but users can only create those coarsely, by hand. Most tools also do not support aligning the control points of polylines or curves, but only the entire object. According to the principle of Polymorphism, the “align” and “distribute” commands should be applicable to any object with a position and size and to any shape, not just horizontal and vertical lines.

Finally, the align and distribute commands result in poor Reuse. For example, it is not possible to extract the alignment and/or distribution properties of a set of objects to apply them to another set.

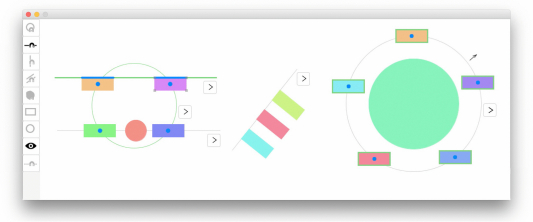

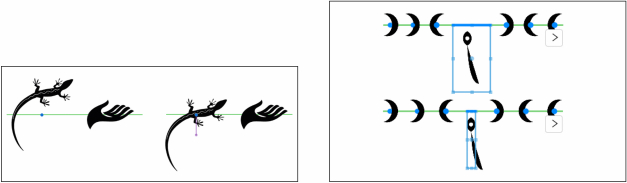

5.4.2 Constructive Power. StickyLines is the result of applying Reification to the align command: A magnetic guideline is an object that can be created, moved, and deleted like any other object in the diagram. The user can attach an object to the guideline by dragging the object and snapping it to the guideline, and can detach an object by dragging it away from the guideline (Figure 7). Crucially, the objects attached to a guideline move with it when the guideline itself is moved, maintaining the alignment.
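The attach, detach, and move behavior described above can be sketched as follows. This is a simplified illustration restricted to horizontal guidelines; the `Node` and `HorizontalGuideline` classes and the `snap_distance` threshold are assumptions for this sketch and are not taken from the StickyLines implementation [51].

```python
from dataclasses import dataclass, field


@dataclass(eq=False)  # compare nodes by identity, not by field values
class Node:
    """Hypothetical diagram object with a position."""
    name: str
    x: float
    y: float


@dataclass
class HorizontalGuideline:
    """Hypothetical reified 'align' command: a first-class diagram object."""
    y: float
    snap_distance: float = 5.0  # assumed snapping threshold
    attached: list = field(default_factory=list)

    def drop(self, node: Node) -> None:
        """Called when the user releases a dragged node."""
        if abs(node.y - self.y) <= self.snap_distance:
            node.y = self.y                  # snap onto the guideline
            if node not in self.attached:
                self.attached.append(node)
        elif node in self.attached:
            self.attached.remove(node)       # dragged away: detach

    def move_to(self, y: float) -> None:
        """Moving the guideline moves every attached node with it."""
        self.y = y
        for node in self.attached:
            node.y = y


a, b = Node("a", 10, 98), Node("b", 50, 103)
guide = HorizontalGuideline(y=100)
guide.drop(a)
guide.drop(b)
guide.move_to(200)   # both nodes follow the guideline
b.y = 260            # the user drags b far from the guideline
guide.drop(b)        # b is detached
guide.move_to(300)   # only a follows
```

The alignment is a persistent relationship held by an object rather than the one-off effect of a command, which is why it survives later edits.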

StickyLines also supports the even distribution of objects along a guideline: When objects are added to or removed from it, or when the endpoints of the guideline are moved, the objects move along the StickyLine so that they stay evenly distributed. Finally, StickyLines reifies the concept of adjusting the alignment of an object: When using the arrow keys to move an object attached to a StickyLine, a purple line linking the object to the line, called a “tweak”, shows the visual offset applied to the object (Figure 8, left). The object is still attached to the line, and moves with it. The tweak itself is an object (Reification) that can be selected, copied and pasted (Reuse), or deleted. The same principle applies to the bounding box of an object when distributing objects (Polymorphism): The box can be made visible and directly modified, changing the system's notion of the object's bounding box and therefore altering the distribution accordingly (Figure 8, right).
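A tweak can be sketched as a small object that stores the visual offset between an object and its guideline, so that the adjustment persists when the guideline moves and can be copied to another object. The names `Tweak`, `TweakableGuideline`, and `nudge` are hypothetical; this is an illustration under those assumptions, not the StickyLines code.

```python
import copy
from dataclasses import dataclass, field
from typing import Optional


@dataclass(eq=False)  # identity-based equality and hashing, usable as dict key
class Node:
    name: str
    y: float = 0.0


@dataclass
class Tweak:
    """Hypothetical reified adjustment: an offset from the guideline."""
    offset: float = 0.0


@dataclass
class TweakableGuideline:
    y: float
    tweaks: dict = field(default_factory=dict)  # maps Node -> Tweak

    def attach(self, node: Node, tweak: Optional[Tweak] = None) -> None:
        self.tweaks[node] = tweak if tweak is not None else Tweak()
        self._place(node)

    def nudge(self, node: Node, delta: float) -> None:
        # An arrow-key adjustment is stored in the tweak, not lost.
        self.tweaks[node].offset += delta
        self._place(node)

    def move_to(self, y: float) -> None:
        self.y = y
        for node in self.tweaks:
            self._place(node)

    def _place(self, node: Node) -> None:
        node.y = self.y + self.tweaks[node].offset


logo, label = Node("logo"), Node("label")
guide = TweakableGuideline(y=100.0)
guide.attach(logo)
guide.attach(label)
guide.nudge(logo, -3.0)
guide.move_to(200.0)  # logo.y is 197.0, label.y is 200.0
# Reuse: paste a copy of the logo's tweak onto the label.
guide.attach(label, copy.copy(guide.tweaks[logo]))
```

Storing the adjustment as data addresses the problem noted in the analysis: the system keeps track of manual corrections instead of discarding them at the next re-alignment.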

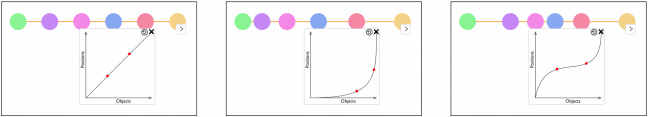

Another use of Reification is the ability to change the distribution function that maps the rank of objects on a guideline into their position. StickyLines can show this mapping as a curve, which can be edited to create a layout where objects are progressively closer or further away from each other (Figure 9).
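One way to read the distribution function is as a mapping from the normalized rank of an object to its normalized position along the guideline. The sketch below assumes that reading; the `distribute` function, its parameters, and the quadratic example curve are illustrative choices, not details of the StickyLines implementation.

```python
from typing import Callable


def distribute(count: int, start: float, end: float,
               mapping: Callable[[float], float] = lambda t: t) -> list:
    """Place `count` objects between `start` and `end` (hypothetical helper).

    `mapping` takes a normalized rank in [0, 1] and returns a normalized
    position in [0, 1]; the identity mapping gives an even distribution.
    """
    if count == 1:
        return [(start + end) / 2]
    return [start + (end - start) * mapping(i / (count - 1))
            for i in range(count)]


even = distribute(5, 0.0, 100.0)
# even == [0.0, 25.0, 50.0, 75.0, 100.0]

# A convex curve places objects progressively further apart.
eased = distribute(5, 0.0, 100.0, mapping=lambda t: t * t)
# eased == [0.0, 6.25, 25.0, 56.25, 100.0]
```

Editing the curve, as in Figure 9, then amounts to replacing `mapping` while the objects and the guideline stay the same.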

StickyLines support Polymorphism by allowing any object to be attached to them, including geometric shapes, text, and the corners of multi-segment lines and arrows. In addition, any shape can be turned into a guideline, including shapes that are part of the diagram itself (Figure 7, right). We also found it useful to let guidelines be the target of other commands, such as setting the fill or border color of shapes. Rather than applying these attributes to the guideline itself, the command applies them to each shape attached to the guideline, as if they were a group.

Since guidelines and tweaks are objects that users can manipulate directly, they naturally support Reuse, such as copy-paste and duplicate. Copying a guideline copies the objects attached to it, but it is also possible to copy just the guideline, with its attributes. This is particularly useful when the guideline is used for distribution, e.g., to copy the distribution function.

We found that StickyLines blur the distinction between tools and objects of interest: A StickyLine is used as a tool when the user moves it to move the aligned objects, or when it “grabs” objects as the user moves and releases the guideline over them; but it is also the object of interest when the user snaps an object onto it, or when a new StickyLine is created automatically when an object alignment is detected. A StickyLine is therefore not just an object “ready-at-hand” that works only when explicitly manipulated, it is an object “present-at-hand” that affects the behavior of other objects as they come near it.

Our performance evaluation of StickyLines showed that it was up to 40% faster than traditional align and distribute commands [51]. This is due mainly to the fact that StickyLines keep objects aligned, and that users can manipulate aligned objects as groups even when individual objects are attached to multiple StickyLines.

We also observed that graphic designers used StickyLines in creative and unexpected ways. While tweaks were intended as small adjustments to an object's position, we observed some users creating giant tweaks so that they could move a set of non-aligned objects together by moving the guideline, reifying the notion of a group. A user also asked if the concept of distribution could be generalized to 2D, to automatically lay out (and tweak) objects within an area instead of along a line. We also observed that Petri Net designers started to create guidelines before creating the diagram itself, as a way to plan the overall layout. This shows how the generative principles not only help generate creative and powerful solutions, but also how they inspire users to take them even further. It also provides evidence that users were employing technical reasoning to appropriate the properties of the guidelines in unexpected ways.

5.5 Applying the Generative Principles: TEXTLETS

Text editing is a basic feature of many interactive systems, and word processors are one of the most heavily used applications. Yet text editing has not changed very much over the past decades. In the context of the European Research Council project ONE (Unified Principles of Interaction), we interviewed two groups of professional users who rely on word processors for their work, namely contract lawyers and patent lawyers, to study how they manage the internal constraints and relationships that are critical to these documents, such as using the proper vocabulary consistently, meeting constraints such as word length, or maintaining consistency between the claims and the body of a patent [71].

5.5.1 Analytical and Critical Power. We found that while all participants used Microsoft Word extensively, they did not use built-in features that could address their needs, even when they knew about them. For example, many participants do not use global search and replace because they cannot check its effects easily, and they hesitate to use incremental search and replace for fear of missing a change. Some prefer numbering items in a contract by hand, even if it also means renumbering them and their references by hand every time an article is added, moved, or removed. They rarely use styles, as these tend to pollute documents as they circulate among users, and they often manage versions and variants by hand, e.g., by using colored text rather than Word's review mode.

Our interviews showed that users prefer these manual operations because they give them a sense of control. The fact that the effects of Microsoft Word commands such as search-and-replace are not directly visible and editable, i.e., reified into objects that they can inspect and revise, makes them wary that “it did not do the right thing”, with possible dire legal consequences. They find that checking that the command worked is more tedious than performing the changes manually.

The lack of Polymorphism for Microsoft Word features that are seemingly identical, such as numbering list items, section titles, references, and footnotes, leads to cognitive overload: Users must rely on procedural learning rather than technical reasoning, as each command works differently and has different settings.

Finally, the lack of Reification limits Reuse. For example, styles are not first-class objects that can be directly copied and pasted, e.g., across documents. The participants in our study sometimes create their own templates, and use copy-paste extensively, but fight with the side effects such as “style pollution”.

5.5.2 Constructive Power. In order to address some of these issues, we applied Reification to the most common operation in text editing after typing text: selection. In current text editors, selection is transient: The user selects some text, invokes a command that affects the text, e.g., turns it bold, and the selection is lost as soon as the user clicks elsewhere. By reifying text selection into a first-class object, called a textlet [71], we turn the transient selection into a persistent object that users can return to at any time. Textlets are highlighted in the document and displayed in a side panel. Clicking a textlet reselects the associated text.

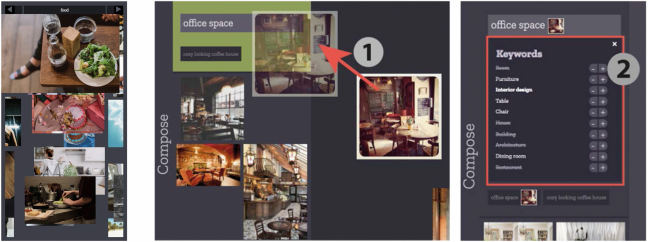

The power of Textlets derives from the ability to assign behaviors to them. For example, the word count behavior adds a counter that displays the number of words in the textlet in real time, as the content of the textlet is edited (Figure 10). Similarly, a variant behavior lets users record multiple alternatives for the content of the textlet, and pick the one being displayed. A more sophisticated behavior is search-and-replace (Figure 11): A search textlet dynamically creates textlets for each occurrence of the search text and lets users replace occurrences one by one, in the order they want, or all at once. Replaced occurrences are displayed in a different color, and the user can undo and redo any change. Another example is numbering (Figure 12), which creates automatically numbered textlets according to a pattern defined by the user; each such textlet can then manage references to it as separate textlets.

Behaviors represent a different form of Reification than that of StickyLines or the basic textlet introduced above. Rather than turning a command into an object, they turn a command into a dependency that is re-evaluated and updated when the content of the surrounding document changes, as in a spreadsheet. Attaching the command to the textlet makes it more concrete, observable, and manipulable. For example, a word-counting behavior turns a textlet into a real-time word-counting instrument.
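The idea of a behavior as a dependency that is re-evaluated on every edit can be sketched as follows. To keep the example short, the textlet here holds its own content rather than a range in a surrounding document; the `Textlet` class, its `attach` method, and the `values` dictionary are hypothetical and do not describe the Textlets prototype [71].

```python
from typing import Callable

Behavior = Callable[[str], object]


class Textlet:
    """Hypothetical persistent selection with spreadsheet-like behaviors."""

    def __init__(self, content: str) -> None:
        self._content = content
        self.behaviors = {}   # behavior name -> function of the content
        self.values = {}      # behavior name -> latest computed value

    def attach(self, name: str, behavior: Behavior) -> None:
        self.behaviors[name] = behavior
        self.values[name] = behavior(self._content)

    @property
    def content(self) -> str:
        return self._content

    @content.setter
    def content(self, text: str) -> None:
        self._content = text
        # Dependency update: recompute every attached behavior.
        for name, behavior in self.behaviors.items():
            self.values[name] = behavior(text)


def word_count(text: str) -> int:
    return len(text.split())


abstract = Textlet("Generative theories guide design")
abstract.attach("words", word_count)
abstract.attach("over_limit", lambda text: word_count(text) > 150)

abstract.content = "Generative theories of interaction guide design"
print(abstract.values["words"])  # prints 6
```

Several behaviors coexist on the same textlet, and each stays up to date without the user re-invoking any command.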

Behaviors make textlets Polymorphic: Different behaviors can be attached to a textlet, possibly in combination. For example, combining the variant and word counting behaviors lets users try alternatives of, e.g., an abstract limited to 150 words, and see the word count in real time as they edit the abstract and switch between the alternatives. At a more abstract level, the notion of textlet as Reification of selection is polymorphic in that it can be applied to other types of content than text, e.g., the graphical shapes of a drawing editor or the file icons of a file manager.

Textlets support Reuse at a very basic level: Because a textlet remembers the otherwise transient state of a selection, the user can instantly re-select the text by clicking the textlet (Input Reuse). At a higher level, textlets and their attached behaviors can be copied and pasted, and attached to a different selection (Output Reuse). Turning transient selections into persistent objects also makes it possible to, e.g., manage multiple simultaneous searches whereas the traditional approach only supports one search string at a time. This makes it easy to reuse a previous search.
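The search behavior and the multiple simultaneous searches mentioned above can be illustrated with a short sketch. Each search is a persistent object that recomputes its occurrences from the current text, and occurrences can be replaced individually, in any order. The `SearchTextlet` and `Occurrence` names are hypothetical, and the literal substring matching is an assumption of this example.

```python
import re
from dataclasses import dataclass


@dataclass(frozen=True)
class Occurrence:
    """Hypothetical occurrence of a search string: a character range."""
    start: int
    end: int


class SearchTextlet:
    """Hypothetical persistent search that can be queried at any time."""

    def __init__(self, query: str) -> None:
        self.query = query

    def occurrences(self, text: str) -> list:
        # Literal match; re.escape neutralizes regex metacharacters.
        pattern = re.escape(self.query)
        return [Occurrence(m.start(), m.end())
                for m in re.finditer(pattern, text)]

    def replace(self, text: str, occurrence: Occurrence, replacement: str) -> str:
        # Replace a single occurrence chosen by the user.
        return text[:occurrence.start] + replacement + text[occurrence.end:]


text = "The device has a housing. The device has a cover."
device_search = SearchTextlet("device")   # two searches coexist
cover_search = SearchTextlet("cover")

hits = device_search.occurrences(text)
text = device_search.replace(text, hits[1], "apparatus")
print(text)  # prints "The device has a housing. The apparatus has a cover."
```

Because the searches are objects rather than a single transient dialog state, the earlier search remains available after the replacement and simply reports its remaining occurrence.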

The participants in our study found Textlets particularly easy to use and efficient for their tasks, giving them a sense of control and appropriate feedback on their actions [71]. As with StickyLines, we observed appropriation of Textlets by users. For example, some participants created search textlets for forbidden words, to track words that they had decided should not be used, e.g., in a patent. Typing such a word would immediately highlight it, warning the user in a non-obtrusive way. We also brainstormed many other behaviors that could be attached to textlets, such as indexing words for a glossary, creating spreadsheet-like formulas for calculated text, hiding, summarizing or translating text, and so on.

5.6 Discussion

The original article [13] introduced Instrumental Interaction as an interaction model and demonstrated its descriptive, evaluative, and generative power, similar to the analytical, critical, and constructive dimensions we are using in this article. The followup article [17] introduced the three principles of Reification, Polymorphism, and Reuse. However, the formal articulation of the link between the notion of instrument and the theoretical concepts of affordance and technical reasoning was made several years after these articles and remains an ongoing research topic.

Since these original papers, we have used Instrumental Interaction in many projects and for teaching. Students have applied the generative principles successfully in many applications, such as reifying paths in map applications, reifying layout in presentation software, or reifying channels in communication applications [69]. Instrumental Interaction has also been used beyond desktop interfaces, including touch-based interfaces [42, 155], multi-surface/multi-device interaction [90], collaborative systems [91], and tangible interfaces [83]. These examples demonstrate Instrumental Interaction's ability to suggest novel and powerful techniques. Table 1 summarizes questions to ask and issues to address, analytically, critically, and constructively, to apply the concepts and principles of instrumental interaction.

| Instrumental Interaction | Analytical | Critical | Constructive |
|---|---|---|---|
| Concepts | | | |
| Object of Interest | What are the objects visible and directly manipulable by the user? | Do these objects match those of the users’ mental models? | Are there other objects of interest, e.g., styles in a text editor? Should some objects of interest be turned into instruments? |
| Instrument/Tool | What functions are available as tools, e.g., in tool palettes, as opposed to commands, e.g., menu items? | Do the tools actually work as such, i.e., by extending users’ capabilities? Do the tools enable technical reasoning? | Which commands can be turned into tools? Are they related to physical tools? |
| Principles | | | |
| Reification | Which concepts/commands are reified into interactive objects/tools? How can these objects be manipulated? | Are the reified concepts effective? Are the objects directly manipulable? | Which concepts/commands should be reified? Into which objects/tools? What manipulations should be available? |
| Polymorphism | Which commands/tools apply to objects of different types? Do they apply to collections of heterogeneous objects? | Should commands/tools apply to multiple object types? Which types? | How to make each instrument (more) polymorphic? How to create groups of heterogeneous objects? |
| Reuse | Which commands/objects can be reused? | Which commands/objects should be reusable? | How to make commands reusable (input reuse)? How to make objects reusable (output reuse)? |

Instruments transform user actions into operations on the objects of interest, and therefore act as modes: The same user actions can have different effects according to the instrument being used. While modes are often considered harmful [132, p. 42] [147], instruments reduce the risks of mode errors [122] because they are “ready-at-hand”, i.e., they are internalized by the user. Indeed, Larry Tesler, creator of cut-copy-paste and a strong opponent of modes, recognized that “modes can be good when they support a metaphor like picking up a brush” [147].

Tool-based interaction can make interfaces more intuitive by enabling technical reasoning, where users fetch the tool with the proper technical effect to get the result they seek. Most interactive software systems rely instead on procedural knowledge, which requires learning and remembering commands, rather than on technical reasoning, which develops through practice and can be transferred from one context to another. We can find evidence of technical reasoning in the fact that users spontaneously develop creative and unexpected uses of digital instruments, as illustrated with StickyLines and Textlets.

At a larger scale, the notion of instrument challenges the notion of application that is a cornerstone of today's interactive environments [15]. Instruments should not be trapped inside applications, and the content managed by applications should be more interoperable. Ideally, a StickyLine should be available not only for graphical editing, but also to align the icons in a file manager, the windows on the screen, or the margins in a word processor. Similarly, Textlets should be available anywhere there is text, such as the names of files or the result of an online search. The power of Instrumental Interaction therefore goes beyond incremental improvements to existing systems. Instead, it helps HCI researchers to deeply re-think interaction and create a new breed of interactive environments that are more flexible and powerful, yet simpler to use.

6 EXAMPLE 2: HUMAN–COMPUTER PARTNERSHIPS

The second example explores the concept of a Human–Computer Partnership, which operates at a different time scale than Instrumental Interaction. Instead of examining how to create individual instruments that accomplish specific functions such as alignment and selection, we shift the focus to the process of use over time. Users establish stable interaction patterns with time spans that range from seconds and minutes to months or even years. This process involves at least two inter-related phenomena: When faced with a new technology, users must discover its capabilities and adapt to its existing features. Yet users can also appropriate technology to meet specific needs or to generate personalized output. Effective Human–Computer Partnerships must account for both, which becomes even more complex when interacting with “intelligent” systems that learn from or influence the user's behavior. We need to consider how users and systems can share agency, while letting users retain control over the interaction. The challenge is how to create simple, easy-to-understand forms of interaction for novices, while providing an incrementally learnable path to achieve the flexibility and power of an expert.

6.1 Theoretical Foundations

Early computers took up entire rooms and required a small army of human technicians to program and maintain them. However, today's computer technology is so small and inexpensive that many people own multiple devices and actively construct personal software ecosystems over time [69]. Users are deeply influenced by the computational ecology they grow up with—Apple users tend to stick with Apple devices, PC users stick with Microsoft environments, and Linux users stick with Linux—deriving their skills and expectations from previous experience, which affects their future interactions. HCI's historical emphasis on point designs does not encourage researchers to consider how users and their corresponding software ecologies [56] co-develop. The notion of Human–Computer Partnerships builds on theory from the natural sciences to better inform this dynamic relationship.

6.1.1 Co-Adaptation in Evolutionary Biology. The biological phenomenon of Co-adaptation specifies how species are both affected by and affect the environments in which they live. The more familiar term co-evolution describes how the actions of certain species reciprocally affect each other's behavior over millennia. Each species in a co-evolutionary relationship exerts selective pressures on the other, thus influencing how the other evolves. For example, anaerobic bacteria released oxygen into the atmosphere, which created a new, oxygenated habitat where aerobic bacteria evolved that can breathe oxygen [108]. More relevant to HCI are the changes that occur within an organism's lifetime. In The Origin of Species [52], Charles Darwin defined co-adaptation as “the usually beneficial relationship between different species”, where the “skills, habits, actions, or form of one species matches and uses the skills, habits, actions, or form of another species”. Darwin explains that co-adaptation refers to the interactions among individual organisms, whereas co-evolution refers to their evolutionary history.

The key idea here is that organisms do not simply adapt to their current environment and the other organisms within it. Rather, organisms also actively participate in the evolutionary process: Their actions adapt and modify the environment, such that they and other organisms within that environment evolve and change together. Co-adaptation emphasizes this ongoing, potentially asymmetrical process of mutual influence between organisms and the environment, where “survival of the fittest” is not simply a matter of an organism adapting to a changing environment, but also of it physically changing that environment to ensure its survival.

6.1.2 Co-Adaptation in HCI. The concept of Human–Computer Partnership builds upon Mackay's [109] observations of Co-adaptation with interactive systems, where users do not just passively react to a technology's features, but also intentionally appropriate it to meet their needs. Some systems are designed to reduce this potential for customization, such as the purposefully limited options available on automated teller machines. However, other systems encourage exploration and innovation, such as the underlying structure of spreadsheets that supports far more innovative activities than simply adding up columns of numbers [119]. Co-adaptation takes the user's perspective, highlighting the dual relationship users establish with any interactive technology, where they discover how it works but also modify it for new purposes. When we take the system's perspective, especially with an intelligent system, we see a similar, although not identical, relationship, where some systems adapt to the user, e.g., smartphones that suggest words based on the user's earlier input [156, 157], while others adapt the user's behavior, e.g., to teach users new skills. In summary, Co-adaptation offers a lens for examining the dual role of the user's interaction with technology, which shifts the focus from what Bill Buxton calls “getting the design right” to “getting the right design” [43].

6.2 Concepts of Human–Computer Partnerships

The concept of Co-adaptation [109] accounts for how users are continually learning from and modifying technology over time. It challenges the idea that interaction designers can maintain full control over “the” user experience, since any product may be experienced in ways that were never predicted [62]. Carroll [45] argues that users struggle with technology in a series of attempts to engage their prior knowledge and skill to accomplish meaningful results. This means that users should be viewed as active interpreters of their technology who not only assess how the system is “supposed” to be used, but also how to customize it for current or future needs. We argue that true Human–Computer Partnerships should help users:

- adapt to the system by supporting discovery of how it works; and

- adapt the system by supporting appropriability of its characteristics or by generating expressive output.

For example, creativity support applications include sets of tools with user-modifiable parameters. Non-experts should not only be able to discover which tools perform which functions, but, importantly, which of their needs can be addressed by which tools. The system might help users find or perform relevant commands, or reveal how it interpreted a particular command, or how to correct possible errors. Similarly, it is important to support user innovation by letting users take advantage of technical reasoning [125] to infer the system's properties, and re-purpose them to create novel solutions or different forms of output.

These two properties have clear correlates in the physical world. For example, chopsticks are tools designed for eating rice or noodles, whose physical properties—size, shape, weight, rigidity—are easily discerned. Although efficient use of chopsticks requires knowledge and practice, non-chopstick users can still learn through trial and error, although the process may be slow and messy. Over time, users discover and master the finer points of chopstick use, such as how to balance them, and which fingers should move or remain static. Experienced chopstick users can use technical reasoning to adapt objects with the appropriate properties to perform the same task. For example, a thin, round, rigid pencil is about the right length and will do in a pinch. Another form of adaptation is to take advantage of the tool's physical properties for new purposes, such as creating an impromptu drumstick or tying back long hair. Users can also adapt an object's properties for artistic expression, such as the artist who created a full-size portrait of Jackie Chan2 by assembling bundles of chopsticks of varying sizes into a large, tightly spaced grid to create the effect of pixels of varying shades of color intensity. The challenge is to make interactive systems similarly understandable and adaptable.

6.2.1 Reciprocal Co-Adaptation. The above examples involve interaction with “non-intelligent” objects that have no agency of their own. An artist can hone the edge of a pencil to achieve a particular effect, but the pencil will not suggest which edge will produce the best result. By contrast, intelligent systems can act independently, with their own agency. This suggests that such systems should be able to:

- adapt to users, by learning from their behavior; and

- adapt users, by changing their behavior.

Here, we see the same basic co-adaptive relationship as before, although the specific natures of human and system intelligence are very different. The third property asks the system to capture patterns of the user's behavior over time, so it can adjust its responses to meet the specific needs of that individual. However, this also implies that users should be able to discover what the system has inferred from their behavior, and change it if it is unwanted or incorrect. The fourth property asks the system to modify the user's behavior, ideally with the user's consent. This can have major benefits for the user, as when educational software [8] improves the user's skills. More controversially, some systems, e.g., persuasive technologies [61, 113], modify users’ behavior without their explicit knowledge or permission: The challenge remains how such systems can offer users “informed consent” [40].

To be effective, a Human–Computer Partnership with an intelligent system requires careful thought about how users and the system share agency, especially how to allocate control. Users reason about tasks differently than computers, and may or may not be aware of the system's agency, which makes intelligent tools significantly more complex to design and understand. We use the term Reciprocal Co-adaptation to describe how these four relationships cross-influence each other to form a Human–Computer Partnership.
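As a minimal sketch of the third property, and of the user's corresponding ability to discover and change what the system has inferred, consider a toy word suggester. The class `AdaptiveSuggester` and its methods `observe`, `suggest`, `inspect`, and `forget` are hypothetical names introduced here for illustration only; they do not describe any system discussed in this article. The fourth property, adapting the user's behavior, is not modeled.

```python
from collections import Counter


class AdaptiveSuggester:
    """Hypothetical word suggester that adapts to one user's typing history."""

    def __init__(self):
        # word -> number of times this user has typed it
        self._counts = Counter()

    def observe(self, word):
        """The system adapts to the user: record each word the user enters."""
        self._counts[word.lower()] += 1

    def suggest(self, prefix, k=3):
        """Return up to k learned words that start with prefix, most frequent first."""
        prefix = prefix.lower()
        matches = [(w, n) for w, n in self._counts.items() if w.startswith(prefix)]
        # Sort by descending frequency, then alphabetically for stable results.
        matches.sort(key=lambda item: (-item[1], item[0]))
        return [w for w, _ in matches[:k]]

    def inspect(self):
        """Let the user discover what the system has inferred from their behavior."""
        return dict(self._counts)

    def forget(self, word):
        """Let the user remove an inference that is unwanted or incorrect."""
        self._counts.pop(word.lower(), None)


suggester = AdaptiveSuggester()
for typed in ["partner", "partner", "party", "pattern"]:
    suggester.observe(typed)

print(suggester.suggest("par"))  # ['partner', 'party']
suggester.forget("party")
print(suggester.suggest("par"))  # ['partner']
```

The design choice worth noting is that `inspect` and `forget` sit alongside `observe`: the system's model of the user stays visible and editable, so agency is shared rather than held by the system alone.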

6.3 Generative Principles for Human–Computer Partnerships

Successful Human–Computer Partnerships are co-adaptive: They allow users to successfully adapt to the specific characteristics of the system, and also adapt the system to meet their current needs and desires. This suggests the following three generative principles:

6.3.1 Discoverability. When first approaching a new system, users usually have an idea of how it works and what they seek to accomplish with it. Even so, they need help figuring out where expected commands are located, and how to invoke them, as well as finding out which new commands or features are available. They need feedback about their previous actions to understand how the system interpreted them, and also feedforward, to discover how they can achieve particular effects. When we consider Reciprocal Co-adaptation, the system should also learn from the user's behavior, and use that information to inform the user or achieve specified goals.

6.3.2 Appropriability. When people try to accomplish a particular task, they look for appropriate tools in the system. If they cannot find them, they should be able to modify or re-purpose commands or features to meet their needs. Ideally, as with physical systems, users should be able to re-purpose various properties of the interface to create new tools. This may be as simple as remapping gestures to new commands, or taking advantage of certain features to accomplish new tasks. When we consider Reciprocal Co-adaptation, the system should explicitly influence the user's behavior, either through teaching new skills or subtly modifying their actions.

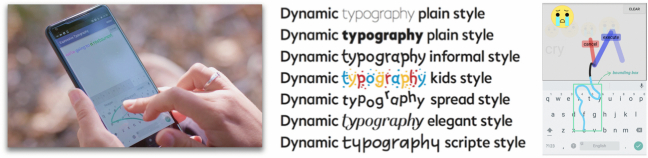

6.3.3 Expressivity. Each person generates individual variation every time they interact with technology. For example, physical handwriting reflects both unconscious variation, identifiable as an individual's handwriting style, and conscious choices, such as carefully lettering a love letter. Systems should be able to capture this input variation and transform it into correspondingly rich, expressive output, under the user's control. When we consider Reciprocal Co-adaptation, the system should reflect individual aspects of the user's behavior, such as generative design tools that create dynamic patterns guided by the user's input.

These three principles address different aspects of Co-adaptation in order to better support Human–Computer Partnerships, and can be summarized as follows:

- Discoverability: reveal how the system interprets the user's recent behavior (feedback) and which commands are now possible (feedforward);

- Appropriability: modify the system's behavior by customizing its characteristics for new purposes; and

- Expressivity: create rich, personalized output generated from individual user-controlled input variation.
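To make the three principles concrete, the following toy sketch models a gesture-driven interface. It is an illustration under stated assumptions, not an implementation of any system described in this article: the class `GestureInterface`, its gesture and command names, and the use of gesture speed as the source of input variation are all hypothetical.

```python
class GestureInterface:
    """Hypothetical gesture-driven interface illustrating the three principles."""

    def __init__(self):
        # Gesture name -> command name; users may change these bindings.
        self.bindings = {"circle": "highlight", "zigzag": "erase"}
        # User-replaceable mapping from input variation (speed) to output intensity.
        self.expression = lambda speed: min(max(speed, 0.0), 1.0)

    def feedforward(self):
        """Discoverability: list which gestures are currently possible."""
        return sorted(f"{g} -> {c}" for g, c in self.bindings.items())

    def perform(self, gesture, speed=0.5):
        """Run a gesture and return feedback on how the system interpreted it."""
        command = self.bindings.get(gesture)
        if command is None:
            # Unrecognized input: answer with feedforward instead of failing silently.
            return {"recognized": False, "hint": self.feedforward()}
        # Expressivity: variation in the input shapes the output.
        return {
            "recognized": True,
            "command": command,
            "intensity": self.expression(speed),
        }

    def rebind(self, gesture, command):
        """Appropriability: the user re-purposes a gesture for a new command."""
        self.bindings[gesture] = command


ui = GestureInterface()
print(ui.feedforward())
print(ui.perform("circle", speed=0.9))
ui.rebind("circle", "underline")
print(ui.perform("circle"))
print(ui.perform("spiral"))
```

In this sketch the record returned by `perform` serves as feedback, `feedforward` answers the question of what is possible now, `rebind` leaves the mapping in the user's hands, and replacing `expression` lets the user decide how their own variation is rendered.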

6.3.4 Power in Combination. Each principle addresses users’ specific needs as they interact with the system over time. Although effective individually, as with Instrumental Interaction, they achieve greater power when combined. Discoverable systems encourage exploration, which can lead to new insights and ideas for appropriation. Appropriable systems offer user-modifiable properties that users can manipulate to create more expressive commands or mappings between user input and rich output. Expressive systems reveal details of how the system interprets the user's behavior, which can lead to discovery of new features (Discoverability), which can, in turn, be modified for new purposes (Appropriability).

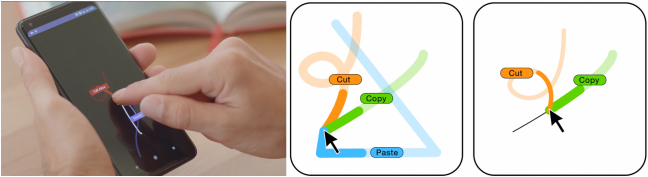

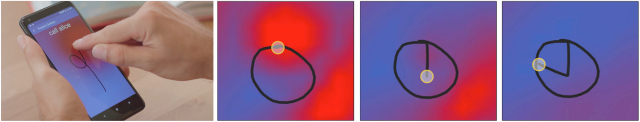

6.4 Applying the Generative Principles: CommandBoard

Under the auspices of the European Research Council CREATIV project, we explored gesture-based interaction on mobile devices, with special emphasis on gesture typing [156]. We observed that, although today's smartphones are extremely powerful computers, they offer an impoverished form of screen-based interaction, restricted primarily to tapping, swiping, and pinching. We developed several systems, culminating in CommandBoard [2], that use gestures to increase users’ power of expression while preserving simplicity of interaction. We ran multiple formative studies, including interviews and participatory design workshops, and applied the principles of Discoverability, Appropriability, and Expressivity to generate novel design solutions.

6.4.1 Analytical and Critical Power. Gesture-based interaction allows users to express a huge vocabulary in a limited space, and advances in machine learning make it possible to reliably recognize a wide variety of gestures. Because the “nervous system has the capacity to form multiple long-term (> 24 hours) motor memories” [97], experts rarely forget these learned gestures, resulting in highly efficient interaction.