Article: Evaluating the Extension of Wall Displays with AR for Collaborative Work

R. James, A. Bezerianos, O. Chapuis. Evaluating the Extension of Wall Displays with AR for Collaborative Work. In Proc. ACM 2023 CHI Conference on Human Factors in Computing Systems, CHI 2023. April 2023, pages 1–17. DOI: 10.1145/3544548.3580752 - HAL: hal-04010673

Context

High-resolution wall displays are platforms well suited for collaborative work as they can accommodate multiple people simultaneously and display large quantities of data. Despite these benefits, they are hard to move and expensive to reconfigure and extend. Eventually, they can even run out of space to display all the information to be analyzed.

As their high resolution is still unmatched by other collaborative platforms, we consider another approach: extending them instead of replacing them. These displays are often placed in rooms with ample space in front of them to allow multiple users to move. So, while wall displays are not easy to physically reconfigure or extend, the physical space available in front of them provides a unique opportunity to extend them virtually, for example, through Augmented Reality (AR) headsets.

While others have suggested extending different types of displays with AR headsets, we are the first to study the impact of extending the shared space around the wall display during collaboration.

Contribution

Our contribution is two-fold: a system that extends a wall display using AR in terms of visual space and interaction support; and an empirical study that compares this extension with a wall display alone. Our results show that, with the Wall+AR system, participants extensively used AR virtual surfaces in the physical space in front of the wall. They used virtual surfaces for storing, discarding, and presenting data. Surprisingly, participants often used the virtual surfaces as their main interactive workspace. However, we observed that adding AR to a wall display creates interaction overhead, such as increased physical and mental demand. Nevertheless, it also brings a real benefit over using the wall alone: the Wall+AR system is preferred and found to be more enjoyable and efficient than the wall alone, and we did not measure any loss in performance despite the interaction overhead.

Impact

Our work contributes fundamental understanding to the fields of collaboration and AR. It is the first work to study the concrete trade-offs of combining two collaborative technologies in a collaborative context. It was published in the flagship HCI venue, highlighting the quality of the contribution.

For bibliometrics, see Google Scholar.

Article: ARPads: Mid-air Indirect Input for Augmented Reality

E. Brasier, O. Chapuis, N. Ferey, J. Vezien, C. Appert. ARPads: Mid-air Indirect Input for Augmented Reality. In Proc. IEEE International Symposium on Mixed and Augmented Reality, ISMAR 20. October 2020, pages 332-343. DOI: 10.1109/ISMAR50242.2020.00060 - HAL: hal-02915795

Context

We chose ARPads for three reasons: 1) it is the first contribution that ILDA made to a novel domain of interest -- Augmented Reality (AR); 2) ARPads illustrates the typical contributions that ILDA makes to propose novel forms of input for interactive systems; and 3) ARPads results from a collaborative effort with the LISN VENISE team.

Contribution

In terms of contribution, ARPads introduces the concept of indirect input for AR. It challenges the conventional wisdom that direct input is the only way to manipulate elements in AR, demonstrating that indirect input can be as efficient while alleviating the physical demand and fatigue associated with prolonged direct interaction.

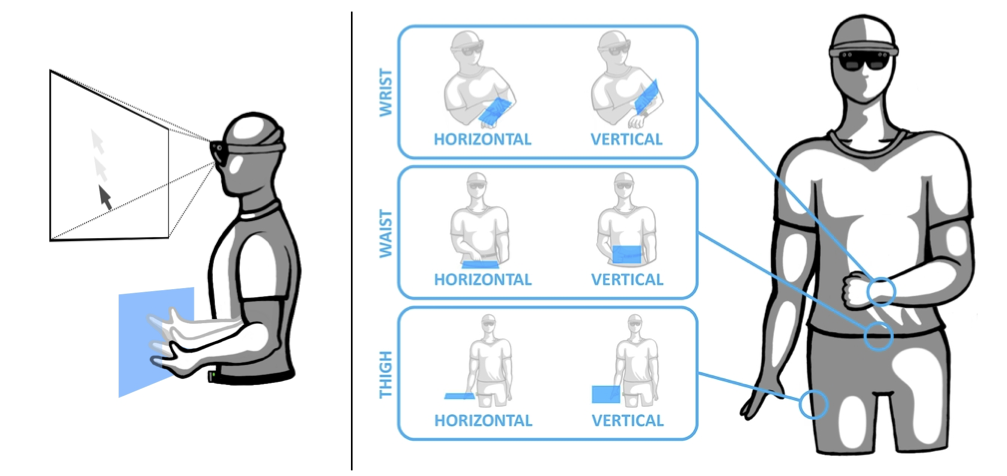

We investigate a design space for ARPads, which takes into account the position of the pad relative to the user's body, and the orientation of the pad relative to that of the headset screen. Our observations support ARPads' performance in comparison with direct input baseline techniques, and allow us to derive guidelines for designing efficient and comfortable ARPads.

Impact

ARPads brings fundamental knowledge to the field of AR. It has been published in the premier conference in AR, recognizing the quality of the contribution and giving it good visibility. It paves the way for the design of more usable and comfortable AR systems.

For bibliometrics, see Google Scholar.

Article: A Comparison of Visualizations for Identifying Correlation over Space and Time

V. Peña-Araya, E. Pietriga, A. Bezerianos. A Comparison of Visualizations for Identifying Correlation over Space and Time. In IEEE Transactions on Visualization and Computer Graphics 26(1) (TVCG/InfoVis '19). August 2019, pages 375-385. DOI: 10.1109/TVCG.2019.2934807 - HAL: hal-02320617

Datasets combining spatial and temporal dimensions have become central to ILDA’s research Axis 3 – Interacting with Diverse Data. This project, published in the premier conference on Information Visualization (IEEE VIS), provides a comprehensive investigation and evaluation of interactive visualizations that support the identification of correlations in multivariate data.

Context

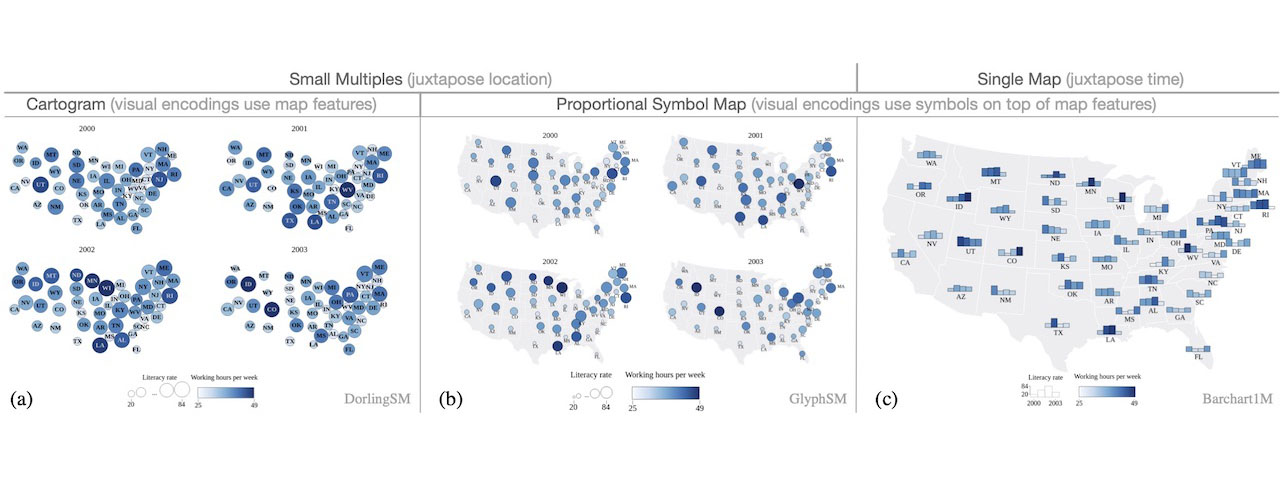

Understanding phenomena often requires looking at how multiple variables are correlated, and how they evolve over space and time. For instance, policy makers in education can be interested in understanding how the increase of literacy rate is correlated with employment rates or monthly salary. Providing effective visual representations of such relationships and their evolution over time can be key to extracting important patterns and trends.

We chose this project because (1) the analysis of correlations over space and time is essential in many domains; and (2) it is representative of the type of contribution we make in the Visualization area.

Contribution

This project contributes recommendations about how to design visualizations that show correlations between variables over space and time. More specifically, it informs visualization designers about the trade-offs between representations that juxtapose maps and those that juxtapose time. This can guide them in selecting the appropriate visualization depending on the number of geographical locations and time intervals they expect to represent.

Impact

This project contributes fundamental knowledge in human perception and visualization. This is recognized by the fact that (1) it has been published in the top conference of the area (IEEE VIS), and (2) it was included in the latest survey of perception-based visualization studies, published in TVCG (Quadri et al. 2021), which brings together the latest knowledge from human perception studies in the domain.

For bibliometrics, see Google Scholar.

Cherenkov Telescope Array UIs

E. Pietriga, L. David, and D. Lebout. Cherenkov Telescope Array Operations Monitoring and Control.

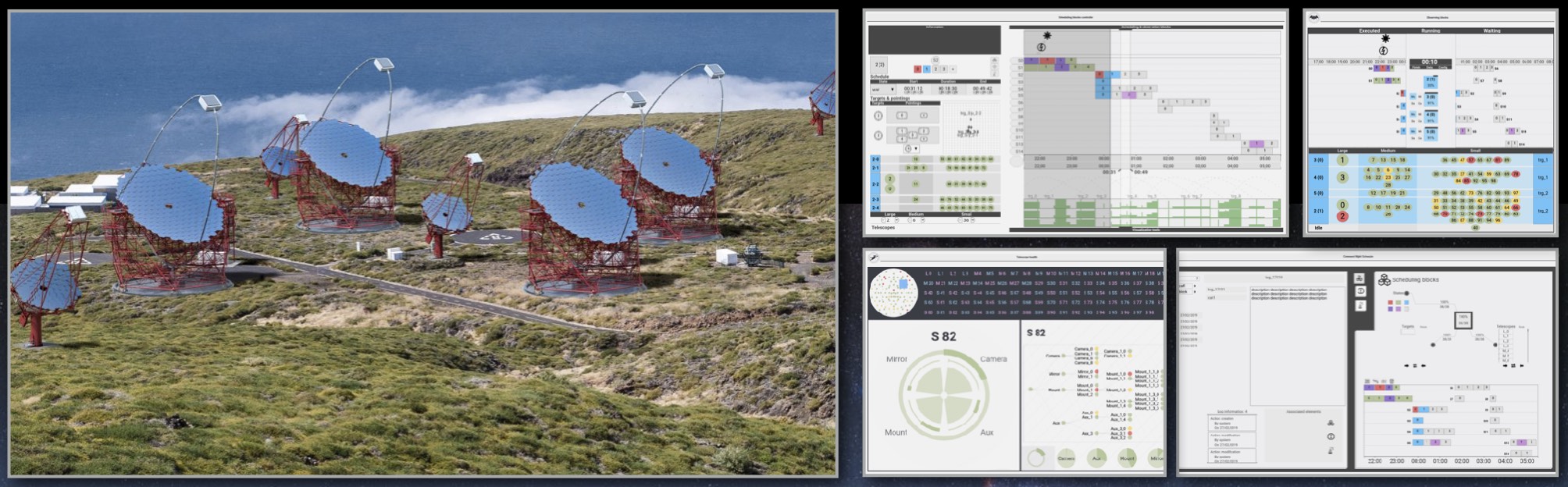

This represents a particularly successful industrial transfer activity. Together with DESY in Germany, ILDA designs and develops interactive visualization components for use in a mission-critical context: the operations monitoring and control center of a state-of-the-art telescope, the CTA observatory (Cherenkov Telescope Array).

Context

The CTA observatory is aimed at performing scientific research in the field of astronomy and high-energy physics (gamma-ray observations). Operations monitoring and control are activities performed by operators, engineers and astronomers who work in the observatory's control room. The goal of this transfer project, called ACADA-HMI, is to design user interfaces composed primarily of advanced interactive visualizations for this particular target audience, aiming to improve situation awareness and help troubleshoot the system when problems occur (warnings, alarms, unexpected astronomical events such as gamma-ray bursts signalled by other observing facilities around the world).

Contribution

Our contribution covers both the design and software development of user interfaces for the CTA control room. ACADA-HMI is the front-end component of a much larger software project called ACADA (Array Control and Data Acquisition System). Overall, ACADA's development involves approximately 40 people organized in 28 sub-projects. ACADA-HMI -- the only part ILDA is involved in -- currently consists of more than 100 kLoC. For the UI design part, we rely on HCI research methodologies, most particularly human-centered design methods such as low-fidelity prototyping and participatory design. We rely on state-of-the-art visualization techniques for the representation of data, based on our strong expertise in this area.

Stringent rules are enforced regarding coding practices to ensure a high level of code quality. A testing methodology has been defined to enable the semi-automatic checking of the display of, and interaction with, UI components.

ILDA leads the human-centered design activities. Emmanuel Pietriga is Principal Investigator. Development work on the ILDA side is handled entirely by an Inria research engineer: Dylan Lebout from 2017 to 2022, Ludovic David from 2023 to 2026.

Impact

The collaboration started informally in 2015. The first contract between Inria and DESY was signed in 2017, then extended to October 2022, and now to October 2026. As the observatory is under construction, we do not yet have feedback from actual end-users since the control rooms do not exist yet. The software is being tested with hardware simulators but has not yet been deployed. However, the contract renewals provide evidence that CTA is satisfied with the collaboration.

This collaboration with DESY has also led to multiple publications that we have co-authored, in the astro-engineering community:

- Bernhard Lopez et al. Methodology for the integration of the Array Control and Data Acquisition System with array elements of the Cherenkov Telescope Array Observatory. In SPIE 13101: Proceedings of the Astronomical Telescopes and Instrumentation conference: Software and Cyberinfrastructure for Astronomy VIII, SPIE, 2024. To appear. https://spie.org/astronomical-telescopes-instrumentation/presentation/Methodology-for-the-integration-of-the-Array-Control-and-Data/13101-16

- Oya Igor et al. The first release of the Cherenkov Telescope Array Observatory Array Control and Data Acquisition software. In SPIE 13101: Proceedings of the Astronomical Telescopes and Instrumentation conference: Software and Cyberinfrastructure for Astronomy VIII, SPIE, 2024. To appear. https://spie.org/astronomical-telescopes-instrumentation/presentation/The-first-release-of-the-Cherenkov-Telescope-Array-Observatory-Array/13101-49

- Oya I., Antolini E., Füßling M., Hinton J. A., Mitchell A., Schlenstedt S., Baroncelli L., Bulgarelli A., Conforti V., Parmiggiani N., Carosi A., Jacquemier J., Maurin G., Colomé J., Hoischen C., Lebout D., Lyard E., Walter R., Melkumyan D., Mosshammer K., Sadeh I., Schmidt T., Wegner P., Schwarz J. & Tosti G. The Array Control and Data Acquisition System of the Cherenkov Telescope Array. In Proceedings of the 17th International Conference on Accelerator and Large Experimental Control Systems (ICALEPCS), 2019. https://inria.hal.science/hal-02421340

- Sadeh Iftach, Oya Igor, Schwarz Joseph & Pietriga Emmanuel. The Graphical User Interface of the Operator of the Cherenkov Telescope Array. In Proceedings of the 16th International Conference on Accelerator and Large Experimental Control Systems (ICALEPCS), 2017. https://arxiv.org/abs/1710.07117

- Sadeh Iftach, Oya Igor, Schwarz Joseph & Pietriga Emmanuel. Prototyping the Graphical User Interface for the Operator of the Cherenkov Telescope Array. In SPIE 9913: Proceedings of the Astronomical Telescopes and Instrumentation conference: Software and Cyberinfrastructure for Astronomy III, SPIE, 2016. https://arxiv.org/abs/1608.03595