This page lists the scientific publications about WILD.

Mid-air pan and zoom - ACM CHI 2011

Mid-air Pan-and-Zoom on Wall-sized Displays

Proc. ACM Human Factors in Computing Systems (CHI'2011)

ACM

pp 177-186

Best Paper Award.

PDF

ACM DL

HAL

Video

Slides

Very-high-resolution wall-sized displays offer new opportunities for interacting with large data sets. While pointing on this type of display has been studied extensively, higher-level, more complex tasks such as pan-zoom navigation have received little attention. It thus remains unclear which techniques are best suited to perform multiscale navigation in these environments. Building upon empirical data gathered from studies of pan-and-zoom on desktop computers and studies of remote pointing, we identified three key factors for the design of mid-air pan-and-zoom techniques: unimanual vs. bimanual interaction, linear vs. circular movements, and level of guidance to accomplish the gestures in mid-air. After an extensive phase of iterative design and pilot testing, we ran a controlled experiment aimed at better understanding the influence of these factors on task performance. Significant effects were obtained for all three factors: bimanual interaction, linear gestures and a high level of guidance resulted in significantly improved performance. Moreover, the interaction effects among some of the dimensions suggest possible combinations for more complex, real-world tasks.

Substance - ACM CHI 2011

Shared Substance: Developing Flexible Multi-Surface Applications

Proc. ACM Human Factors in Computing Systems (CHI'2011)

ACM

pp 3383-3392

PDF

ACM DL

Video

Slides

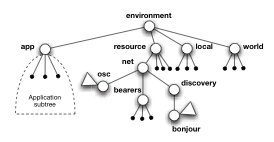

This paper presents a novel middleware for developing flexible interactive multi-surface applications. Using a scenario-based approach, we identify the requirements for this type of application. We then introduce Substance, a data-oriented framework that decouples functionality from data, and Shared Substance, a middleware implemented in Substance that provides powerful sharing abstractions. We describe our implementation of two applications with Shared Substance and discuss the insights gained from these experiments. Our finding is that the combination of a data-oriented programming model with middleware support for sharing data and functionality provides a flexible, robust solution with low viscosity at both design-time and run-time.

jBricks - ACM EICS 2011

Rapid Development of User Interfaces on Cluster-Driven Wall Displays with jBricks

Proc. SIGCHI Symposium on Engineering Interactive Computing Systems (EICS 2011)

ACM

pp 185-190

PDF

ACM DL

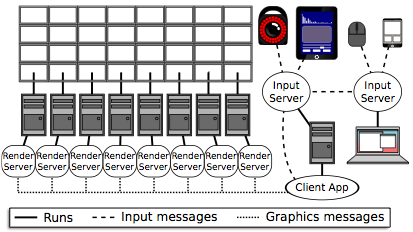

Research on cluster-driven wall displays has mostly focused on techniques for parallel rendering of complex 3D models. There has been comparatively little research effort dedicated to other types of graphics and to the software engineering issues that arise when prototyping novel interaction techniques or developing full-featured applications for such displays. We present jBricks, a Java framework that provides a high-quality 2D graphics rendering engine coupled with a versatile input configuration module, for the exploratory prototyping of interaction techniques and rapid development of post-WIMP applications in this type of environment.

Lessons from WILD - IHM 2011

Lessons Learned from the WILD Room, a Multisurface Interactive Environment

Proc. Conférence Francophone sur l'Interaction Homme-Machine (IHM'2011)

ACM

pp 105-112

PDF

ACM DL

Slides (in French)

Creating the next generation of interactive systems requires experimental platforms that let us explore novel forms of interaction in real settings. This article describes WILD, a high-performance environment for exploring multi-surface interaction that includes an ultra-high-resolution wall display, a multitouch table, a motion-tracking system and various mobile devices. It also presents the integrative research approach of the project and the lessons learned with respect to hardware, participatory design, interaction techniques and software engineering.

IEEE Computer - April 2012

Multisurface Interaction in the WILD Room

IEEE Computer special issue on Interaction Beyond the Keyboard

IEEE

pp 48-56

PDF (author version)

Video

The WILD (wall-sized interaction with large datasets) room serves as a testbed for exploring the next generation of interactive systems by distributing interaction across diverse computing devices, enabling multiple users to easily and seamlessly create, share, and manipulate digital content.

GridScape - AVI 2012

Looking behind Bezels: French Windows for Wall Displays

Proc. Advanced Visual Interfaces (AVI'2012)

ACM

pp 124-131

PDF

ACM DL

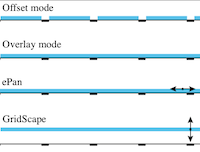

Using tiled monitors to build wall-sized displays has multiple advantages: higher pixel density, simpler setup and easier calibration. However, the resulting display walls suffer from the visual discontinuity caused by the bezels that frame each monitor. To avoid introducing distortion, the image has to be rendered as if some pixels were drawn behind the bezels. In turn, this means that a non-negligible part of the rendered image, which might contain important information, is visually occluded. We propose to draw upon the French-window analogy that is often used to describe this approach, and make the display really behave as if the visualization were observed through a French window. We present and evaluate two interaction techniques that let users reveal content hidden behind bezels. ePan enables users to offset the entire image through explicit touch gestures. GridScape adopts a more implicit approach: it makes the grid formed by bezels act like a true French window, using head tracking to simulate motion parallax and adapt to users' physical movements in front of the display. Both techniques work in single- and multiple-user contexts.
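The bezel-compensated rendering described above can be sketched in a few lines of Python. This is not code from the paper. The `TiledWall` class, its fields and the `pan_x`/`pan_y` offset are hypothetical. The sketch assumes identical monitors whose bezel gaps have already been converted to pixel units. It shows how spacing each monitor's viewport by the bezel gap leaves some pixels rendered "behind" the bezels, and how a single global offset (the idea behind ePan) brings those pixels into view. Head-tracked parallax (GridScape) is not modeled.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class TiledWall:
    """Hypothetical wall of identical tiled monitors (all sizes in pixels).

    Bezel gaps are expressed in virtual-image pixels, an assumption of
    this sketch (e.g. physical bezel width divided by pixel pitch).
    """
    cols: int
    rows: int
    tile_w: int  # active pixels per monitor, horizontally
    tile_h: int  # active pixels per monitor, vertically
    gap_x: int   # combined width of two adjacent bezels
    gap_y: int   # combined height of two adjacent bezels

    def viewport(self, col, row, pan_x=0, pan_y=0):
        """Region (x, y, w, h) of the virtual image shown by tile (col, row).

        Tiles are spaced by tile size plus bezel gap, so virtual-image
        pixels falling in a gap are never displayed. pan_x / pan_y shift
        every viewport at once, i.e. they offset the whole image.
        """
        x = col * (self.tile_w + self.gap_x) + pan_x
        y = row * (self.tile_h + self.gap_y) + pan_y
        return (x, y, self.tile_w, self.tile_h)

    def is_behind_bezel(self, x, y, pan_x=0, pan_y=0):
        """True if virtual-image point (x, y) is occluded by a bezel."""
        lx, ly = x - pan_x, y - pan_y
        total_w = self.cols * self.tile_w + (self.cols - 1) * self.gap_x
        total_h = self.rows * self.tile_h + (self.rows - 1) * self.gap_y
        if not (0 <= lx < total_w and 0 <= ly < total_h):
            return False  # outside the wall entirely, not a bezel case
        in_gap_x = lx % (self.tile_w + self.gap_x) >= self.tile_w
        in_gap_y = ly % (self.tile_h + self.gap_y) >= self.tile_h
        return in_gap_x or in_gap_y
```

For example, with 100-pixel-wide tiles and a 10-pixel gap, the second column of monitors starts at x = 110. Virtual-image columns 100 to 109 therefore stay hidden until the image is offset.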

High-precision pointing - CHI 2013

High-precision pointing on large wall displays using small handheld devices

Proc. Human Factors in Computing Systems (CHI 2013)

ACM

pp 831-840

PDF

ACM DL

Video

Rich interaction with high-resolution wall displays is not limited to remotely pointing at targets. Other relevant types of interaction include virtual navigation, text entry, and direct manipulation of control widgets. However, most work on remotely acquiring targets with high precision has studied remote pointing in isolation, focusing on pointing efficiency and ignoring the need to support these other types of interaction. We investigate high-precision pointing techniques capable of acquiring targets as small as 4 millimeters on a 5.5-meter-wide display while leaving up to 93% of a typical tablet's screen space available for task-specific widgets. We compare these techniques to state-of-the-art distant pointing techniques and show that two of our techniques, a purely relative one and one that uses head orientation, perform as well as or better than the best pointing-only input techniques while using a fraction of the interaction resources.

Smarties - CHI 2014

Smarties: An Input System for Wall Display Development

Proc. Human Factors in Computing Systems (CHI 2014)

ACM

pp 2763-2772

PDF

ACM DL

Video

Wall-sized displays can support data visualization and collaboration, but making them interactive is challenging. Smarties allows wall application developers to easily add interactive support to their collaborative applications. It consists of an interface running on touch mobile devices for input, a communication protocol between devices and the wall, and a library that implements the protocol and handles synchronization, locking and input conflicts. The library presents the input as an event loop with callback functions. Each touch mobile device has multiple cursor controllers, each associated with keyboards, widgets and clipboards. These controllers can be assigned to specific tasks, are persistent, and can be shared by multiple collaborating users to share work. They can control simple cursors on the wall application, or specific content (objects or groups of objects). The types of associated widgets are decided by the wall application, so the mobile interface is customized by the wall application it connects to.

Classification task - CHI 2014

Effects of Display Size and Navigation Type on a Classification Task

Proc. Human Factors in Computing Systems (CHI 2014)

ACM

pp 4147-4156

PDF

ACM DL

Video

The advent of ultra-high resolution wall-size displays and their use for complex tasks require a more systematic analysis and deeper understanding of their advantages and drawbacks compared with desktop monitors. While previous work has mostly addressed search, visualization and sense-making tasks, we have designed an abstract classification task that involves explicit data manipulation. Based on our observations of real uses of a wall display, this task represents a large category of applications. We report on a controlled experiment that uses this task to compare physical navigation in front of a wall-size display with virtual navigation using pan-and-zoom on the desktop. Our main finding is a robust interaction effect between display type and task difficulty: while the desktop can be faster than the wall for simple tasks, the wall gains a sizable advantage as the task becomes more difficult. A follow-up study shows that other desktop techniques (overview+detail, lens) do not perform better than pan-and-zoom and are therefore slower than the wall for difficult tasks.

WILD - publications